This notebook was created by Jean de Dieu Nyandwi for the love of the machine learning community. For any feedback, errors, or suggestions, he can be reached by email (johnjw7084 at gmail dot com), Twitter, or LinkedIn.

Intro to TensorFlow for Deep Learning


1. What is TensorFlow?

TensorFlow is an open-source, end-to-end platform for building machine learning models. Because it is end to end, you can use it to prepare data, build models, diagnose and improve them, and deploy them.

TensorFlow uses Keras as its high-level API. Keras is a beautifully designed API for building deep learning models in popular fields such as Computer Vision and Natural Language Processing.

TensorFlow has a strong community, with many users, learning resources, and a whole range of technical support. Not only does it power many Google products such as YouTube, Maps, and Google Photos, it is also widely used by startups and other big tech companies. If you would like to know who is using TensorFlow, here you go!

2. TensorFlow Model APIs

Because TensorFlow is suited to a variety of tasks, it offers three ways to build deep learning models.

2.1 Sequential API

This is the simplest way to build a model. You stack layers in a single path from the input to the output, and never the other way around. This API is suited to tasks that don't require multiple inputs, multiple outputs, or skip connections.

The Sequential API is a good fit for tasks like image classification or regression. For tasks such as object detection and segmentation, we need another API: the Functional API.

Below is what a sequential model looks like.

# Building a sequential model
from tensorflow import keras

model = keras.models.Sequential([
    keras.layers.Dense(16, activation='relu'),
    keras.layers.Dense(32, activation='relu'),
    keras.layers.Dense(1, activation='sigmoid')
])

Functional API

The Functional API makes it easy to build models with multiple inputs or outputs, or with skip connections.

It is well suited to advanced tasks such as object detection and segmentation. Object detection involves two main tasks.

One is recognizing the object (classification). The other is localizing the object (regression: predicting the coordinates of its bounding box).
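The following minimal sketch makes the two-part structure of object detection concrete. It is a Functional model with one shared body and two output heads. It is not a real detector: the 64-feature input, the 3 classes, and the layer sizes are illustrative assumptions. It only shows that a single Functional model can return a classification output and a bounding-box regression output together.

```python
import tensorflow as tf
from tensorflow import keras

# Illustrative input: 64 features per example (a real detector would take images)
inputs = keras.Input(shape=(64,))
features = keras.layers.Dense(32, activation='relu')(inputs)

# Head 1: classification over 3 hypothetical object classes
class_output = keras.layers.Dense(3, activation='softmax', name='class')(features)

# Head 2: regression of the 4 bounding-box coordinates
bbox_output = keras.layers.Dense(4, name='bbox')(features)

# One model with two outputs, which a Sequential model cannot express
detector = keras.Model(inputs=inputs, outputs=[class_output, bbox_output])

# Run a batch of 2 random examples through both heads
class_pred, bbox_pred = detector(tf.random.normal([2, 64]))
print(class_pred.shape, bbox_pred.shape)  # (2, 3) (2, 4)
```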

You can't build an object detection model with the Sequential API; you need the Functional API instead. (Source)


Below is what a functional model looks like.

# Building the same model with the Functional API
from tensorflow import keras

# keras.Input needs a shape; 8 input features is only an illustrative choice
inputs = keras.Input(shape=(8,))
x = keras.layers.Dense(16, activation='relu')(inputs)
x = keras.layers.Dense(32, activation='relu')(x)
output = keras.layers.Dense(1, activation='sigmoid')(x)

model = keras.Model(inputs, output)

Model Subclassing

The Subclassing API is for building custom models. It gives you full control over every step of model building and training.

In most cases, the Sequential and Functional APIs will be all you need to build almost anything.

Below is what a subclassed model looks like.

# Building the same model with subclassing
from tensorflow import keras

class MLP(keras.Model):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        # Layers are created once here...
        self.dense_1 = keras.layers.Dense(16, activation='relu')
        self.dense_2 = keras.layers.Dense(32, activation='relu')
        self.dense_3 = keras.layers.Dense(1, activation='sigmoid')

    def call(self, inputs):
        # ...and connected in the forward pass
        x = self.dense_1(inputs)
        x = self.dense_2(x)
        x = self.dense_3(x)
        return x

# Instantiate the model
mlp = MLP()

3. A Quick Tour of the TensorFlow Ecosystem

Besides its community, one of my favourite things about TensorFlow is its whole ecosystem of tools and resources. Below is a list of tools, libraries, and resources built to simplify things.

Tools

1. Colaboratory

Colaboratory (Colab) is a free, hosted Jupyter notebook environment that doesn't require any setup. Many popular libraries come preinstalled, so you can import them without installing them first.

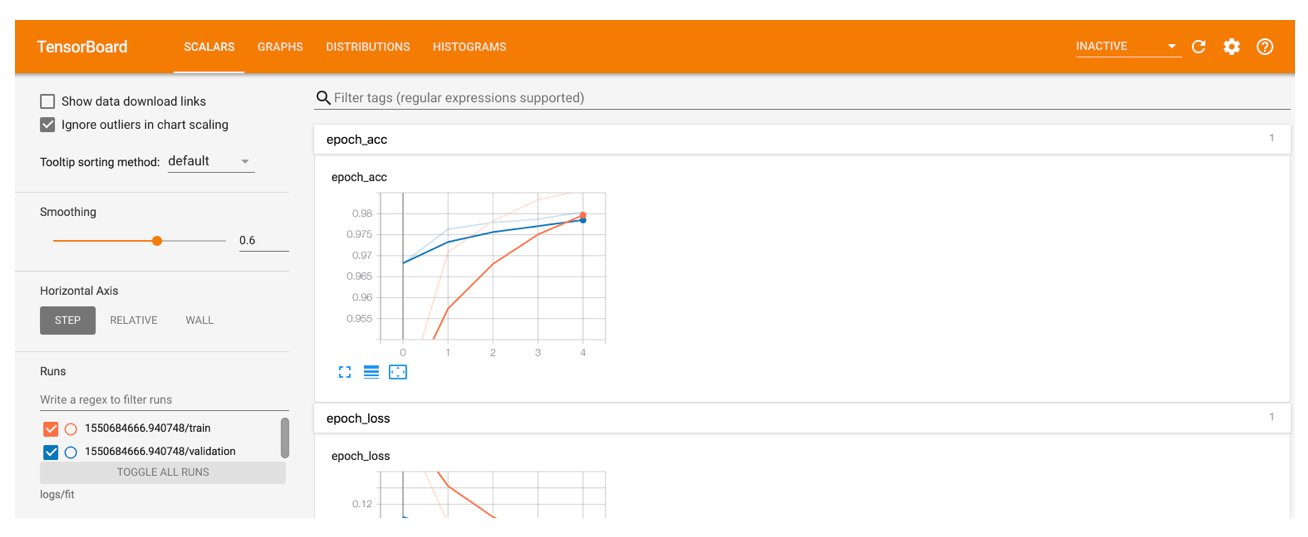

2. TensorBoard

TensorBoard provides engineers and scientists with visualization and debugging tools for their machine learning models.

With TensorBoard, you can:

- Track and visualize the loss and accuracy metrics

- View weights, biases, and other parameters as plots such as histograms or line plots

- Display images, audio, and text data

- Compare hyperparameter settings to see which ones help the model converge
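Metric tracking is usually set up through Keras's TensorBoard callback. Below is a minimal sketch of that setup. It assumes a throwaway temporary log directory and random toy data, and the model itself is arbitrary.

```python
import os
import tempfile

import tensorflow as tf
from tensorflow import keras

# Illustrative log directory; any writable path works
log_dir = os.path.join(tempfile.mkdtemp(), 'logs')

model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(8, activation='relu'),
    keras.layers.Dense(1, activation='sigmoid'),
])
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# Random toy data, only so there is something to log
x = tf.random.normal([32, 4])
y = tf.cast(tf.random.uniform([32, 1]) > 0.5, tf.float32)

# Writes loss/accuracy each epoch, plus weight histograms (histogram_freq=1)
tensorboard_cb = keras.callbacks.TensorBoard(log_dir=log_dir, histogram_freq=1)
history = model.fit(x, y, epochs=2, verbose=0, callbacks=[tensorboard_cb])
```

After training, point TensorBoard at the same directory. In a terminal, run `tensorboard --logdir <log_dir>`. In Colab, run `%load_ext tensorboard` and then `%tensorboard --logdir <log_dir>`.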

3. What-If Tool (WIT)

What if you wanted a visual way to understand your data and model within your environment? WIT is designed exactly for that purpose. It helps you understand your dataset and the outputs of your model.

Models and Datasets

1. TensorFlow Hub

TensorFlow Hub is a collection of ready-to-use models for a variety of tasks, such as image recognition, object detection, image segmentation, sound recognition, and text classification.

2. Model Garden

Model Garden is an official GitHub repository of TensorFlow models, built with high-level APIs. The available models cover Computer Vision, Natural Language Processing, and recommendation.

3. TensorFlow Datasets (TFDS)

TFDS provides ready-to-use datasets of many types: images, text, video, audio, and more. We will be using many TFDS datasets in our deep learning quest.

TensorFlow provides many more tools and functionalities. As much as we can, we will try to leverage them.

This was a quick walkthrough of the TensorFlow ecosystem. If you would like to learn more, go to the TensorFlow website or check out this article that I wrote a while back.

Model Deployment

1. TensorFlow Extended (TFX)

TFX is an end-to-end platform for creating machine learning pipelines. With TFX, you can prepare data, train models, validate them, and deploy them in production environments.

2. TensorFlow.js

TensorFlow.js makes it easy to train and deploy models in web browsers.

3. TensorFlow Lite

TF Lite makes it possible to optimize models and deploy them on mobile devices, embedded devices, and microcontrollers.

4. The Basics of Tensors

4.1 Intro to Tensors

A tensor is a multidimensional array whose elements all share the same data type. A tensor can be a scalar (a single number), a vector, a matrix, or an array with even more dimensions.

If you have used NumPy, tensors are like NumPy arrays, except that tensors can run on GPUs (Graphics Processing Units).

A typical tensor has the following information:

- Shape: The length (number of elements) of each of the tensor's dimensions/axes.

- Rank: The number of dimensions/axes in a tensor. A scalar tensor (a single number) has rank 0, a vector has rank 1 (it is 1D), and a matrix has rank 2 (it is 2D).

- Axis/Dimension: A particular dimension of a tensor.

- Size: The total number of items in the tensor.
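You can read these four attributes directly from any tensor. The small sketch below uses an arbitrary zero-filled tensor of shape (2, 3, 4).

```python
import tensorflow as tf

# Arbitrary example: a rank-3 tensor filled with zeros
t = tf.zeros([2, 3, 4])

print('Shape:', t.shape)                # length of each axis: (2, 3, 4)
print('Rank:', tf.rank(t).numpy())      # number of axes: 3 (same as t.ndim)
print('Length of axis 1:', t.shape[1])  # one particular axis: 3
print('Size:', tf.size(t).numpy())      # total number of elements: 2*3*4 = 24
```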

But why tensors (or NumPy arrays)?

Well, almost any type of data can be represented as an array of numbers. For example:

- An image can be represented as an array of pixel values.

- Text data can be converted into an array of numbers (for example, integer tokens representing words).

- A video (a sequence of images) can be represented as an array of numbers.

Being able to convert raw data into tensors/arrays makes it easy to process, whether we are performing conventional numerical computations or preparing data to feed to a machine learning model. For a simple example, we cannot feed raw text to a machine learning model; the text has to be converted into numbers first.
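Here is a small illustration of turning text into numbers. Keras's TextVectorization layer can learn a vocabulary from a few sentences and then map each word to an integer id. The two sentences are made up for the example, and the exact ids depend on the learned vocabulary, so treat this as a sketch.

```python
import tensorflow as tf

# Two made-up sentences used to build a vocabulary
texts = ['the cat sat', 'the dog sat']

# Maps each word to an integer id (0 is reserved for padding, 1 for unknown words)
vectorizer = tf.keras.layers.TextVectorization(output_mode='int')
vectorizer.adapt(texts)

print(vectorizer.get_vocabulary())
ids = vectorizer(['the cat sat'])
print(ids)  # one row of 3 integer ids, one per word
```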

That's it for the basic introduction to tensors. For more about tensors, check out TensorFlow's introduction to tensors, or this rich Wikipedia page on tensors.

In later parts, we will see how to:

- Create a tensor with tf.constant()

- Create a tensor with tf.Variable()

- Create tensors from existing functions

- Select data in a tensor

- Perform operations in tensor

- Manipulate tensor shape

4.2 Creating a Tensor with tf.constant()

A Tensor can be a scalar, a vector or a matrix.

Let's use tf.constant() to create these tensor types.

A tensor created with tf.constant() is immutable.

# I will first import tensorflow as tf

# Also import numpy as np

# If you are using Colab, no need to install them

import tensorflow as tf

import numpy as np

# Creating a scalar tensor

# You can specify the dtype, but TF will infer it if left unspecified

scalar_tensor = tf.constant(10)

# Displaying created tensor

print(scalar_tensor)

tf.Tensor(10, shape=(), dtype=int32)

# We can also create a vector or rank 1 tensor

# Simply put, a vector is one dimensional

# We can create it from a list of values

vect_tensor = tf.constant([1.0,2.0,3.0,4.0,5.0,6.0])

print(vect_tensor)

tf.Tensor([1. 2. 3. 4. 5. 6.], shape=(6,), dtype=float32)

A one-dimensional tensor (a vector) was created. As you can see, its data type is float32 because the values are floats. When no data type is given, TensorFlow infers it from the values.
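The short sketch below compares three ways a tensor gets its data type: inference from the values, an explicit dtype argument, and tf.cast to convert an existing tensor. The values are arbitrary.

```python
import tensorflow as tf

ints = tf.constant([1, 2, 3])                         # inferred: int32
floats = tf.constant([1.0, 2.0, 3.0])                 # inferred: float32
explicit = tf.constant([1, 2, 3], dtype=tf.float16)   # dtype set explicitly
converted = tf.cast(ints, tf.float32)                 # convert an existing tensor

print(ints.dtype, floats.dtype, explicit.dtype, converted.dtype)
```

Casting matters because, by default, TensorFlow does not automatically promote dtypes in binary operations. For example, adding an int32 tensor to a float32 tensor raises an error instead of converting one of them.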

Let's now create a tensor with rank 2 (two dimensions). This is a matrix.

mat_tensor = tf.constant([[2,4],

[6,8],

[10,12]], dtype=tf.int32)

print(mat_tensor)

tf.Tensor( [[ 2 4] [ 6 8] [10 12]], shape=(3, 2), dtype=int32)

As you can see in the output above, the shape is (3, 2), which means our tensor has 3 rows and 2 columns.

You can also check the number of dimensions (axes) of a tensor using tensor_name.ndim.

scalar_tensor.ndim

0

A scalar tensor has no dimensions (its rank is 0); it is just a single value. If we do the same thing for a vector or a matrix, we get something different.

# A vector has 1 dimension

vect_tensor.ndim

1

# A matrix has 2 dimensions

mat_tensor.ndim

2

Just like a NumPy array, a tensor can have many dimensions. Let's create a tensor with 3 dimensions.

tensor_3d = tf.constant([

[[1,2,3,4,5],

[6,7,8,9,8]],

[[1,3,5,7,9],

[2,4,6,8,1]],

[[1,2,3,5,4],

[3,4,5,6,7]], ])

print(tensor_3d)

tf.Tensor( [[[1 2 3 4 5] [6 7 8 9 8]] [[1 3 5 7 9] [2 4 6 8 1]] [[1 2 3 5 4] [3 4 5 6 7]]], shape=(3, 2, 5), dtype=int32)

tensor_3d.ndim

3

A tensor can be converted into a NumPy array by calling tensor_name.numpy() or np.array(tensor_name).

TensorFlow plays well with NumPy. TensorFlow has also been working on bringing the NumPy API into TensorFlow, and it is available as tf.experimental.numpy.

# Converting a tensor into a NumPy array

n_array = tensor_3d.numpy()

n_array

array([[[1, 2, 3, 4, 5],

[6, 7, 8, 9, 8]],

[[1, 3, 5, 7, 9],

[2, 4, 6, 8, 1]],

[[1, 2, 3, 5, 4],

[3, 4, 5, 6, 7]]], dtype=int32)

# Using np.array(tensor_name)

np.array(tensor_3d)

array([[[1, 2, 3, 4, 5],

[6, 7, 8, 9, 8]],

[[1, 3, 5, 7, 9],

[2, 4, 6, 8, 1]],

[[1, 2, 3, 5, 4],

[3, 4, 5, 6, 7]]], dtype=int32)
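Conversion also works in the other direction, from NumPy to a tensor. The minimal sketch below uses an arbitrary 2x2 array. NumPy creates float64 arrays by default and TensorFlow keeps that dtype, so a tf.cast is a common follow-up when a model expects float32.

```python
import numpy as np
import tensorflow as tf

np_array = np.array([[1.0, 2.0], [3.0, 4.0]])   # NumPy defaults to float64

tensor_from_np = tf.convert_to_tensor(np_array)  # dtype stays float64
print(tensor_from_np.dtype)

tensor_f32 = tf.cast(tensor_from_np, tf.float32) # convert to float32
round_trip = tensor_f32.numpy()                  # and back to NumPy
print(round_trip.dtype)
```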

4.3 Creating a Tensor with tf.Variable()

A tensor created with tf.constant() is immutable: it cannot be changed. Such a tensor cannot be used for the weights of a neural network, because weights need to be updated during training (for example, after backpropagation computes their gradients).

With tf.Variable(), we can create mutable tensors, which can therefore be used for things like neural network weights.

Creating a variable tensor is as simple as creating a constant one.

var_tensor = tf.Variable([

[[1,2,3,4,5],

[6,7,8,9,8]],

[[1,3,5,7,9],

[2,4,6,8,1]],

[[1,2,3,5,4],

[3,4,5,6,7]], ])

print(var_tensor)

<tf.Variable 'Variable:0' shape=(3, 2, 5) dtype=int32, numpy=

array([[[1, 2, 3, 4, 5],

[6, 7, 8, 9, 8]],

[[1, 3, 5, 7, 9],

[2, 4, 6, 8, 1]],

[[1, 2, 3, 5, 4],

[3, 4, 5, 6, 7]]], dtype=int32)>

It can also be converted to a NumPy array, just like tensors created with tf.constant().

# Converting a variable tensor into NumPy array

var_tensor.numpy()

array([[[1, 2, 3, 4, 5],

[6, 7, 8, 9, 8]],

[[1, 3, 5, 7, 9],

[2, 4, 6, 8, 1]],

[[1, 2, 3, 5, 4],

[3, 4, 5, 6, 7]]], dtype=int32)
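You can check the difference in mutability directly. The sketch below uses arbitrary values and shows three things. First, item assignment fails on a tf.constant tensor. Second, a tf.Variable can be updated in place with assign, assign_add, and slice assignment. Third, one hand-written gradient-descent step on a toy loss updates a variable the way neural network weights are updated during training.

```python
import tensorflow as tf

# A constant tensor cannot be modified in place
const = tf.constant([1, 2, 3])
try:
    const[0] = 10
except TypeError:
    print('tf.constant tensors do not support item assignment')

# A variable can be updated in place
var = tf.Variable([1, 2, 3])
var.assign([4, 5, 6])      # replace all values -> [4 5 6]
var[0].assign(10)          # update one element -> [10 5 6]
var.assign_add([1, 1, 1])  # add in place       -> [11 6 7]
print(var.numpy())

# One gradient-descent step on the toy loss (w - 1)^2
w = tf.Variable(3.0)
with tf.GradientTape() as tape:
    loss = (w - 1.0) ** 2
grad = tape.gradient(loss, w)  # 2 * (w - 1) = 4.0
w.assign_sub(0.1 * grad)       # 3.0 - 0.1 * 4.0 = 2.6
print(w.numpy())
```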

4.4 Creating a Tensor from Existing Functions

Some common tensors don't need to be created from scratch, because TensorFlow already provides functions for them.

Examples are tensors of ones, tensors of zeros, and tensors of random values. Let's create them.

# Creating 1's tensor

ones_tensor = tf.ones([4,4])

print(ones_tensor)

tf.Tensor( [[1. 1. 1. 1.] [1. 1. 1. 1.] [1. 1. 1. 1.] [1. 1. 1. 1.]], shape=(4, 4), dtype=float32)

# Creating 1's tensor

ones_tensor_1 = tf.ones([1,10])

print(ones_tensor_1)

tf.Tensor([[1. 1. 1. 1. 1. 1. 1. 1. 1. 1.]], shape=(1, 10), dtype=float32)

# Creating zeros' tensor

tensor_0 = tf.zeros([3,3])

print(tensor_0)

tf.Tensor( [[0. 0. 0.] [0. 0. 0.] [0. 0. 0.]], shape=(3, 3), dtype=float32)
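Besides tf.ones and tf.zeros, TensorFlow has a few other constructors for common patterns. The quick sketch below uses arbitrary shapes and values.

```python
import tensorflow as tf

sevens = tf.fill([2, 3], 7)          # 2x3 tensor where every element is 7
zeros_like = tf.zeros_like(sevens)   # zeros with the same shape and dtype as sevens
evens = tf.range(0, 10, delta=2)     # 0, 2, 4, 6, 8
points = tf.linspace(0.0, 1.0, 5)    # 5 evenly spaced values from 0 to 1
identity = tf.eye(3)                 # 3x3 identity matrix (float32)

for name, t in [('sevens', sevens), ('zeros_like', zeros_like),
                ('evens', evens), ('points', points), ('identity', identity)]:
    print(name, t.shape, t.dtype)
```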

We can also create a tensor with random values. In neural networks, for example, weights are usually initialized with random values.

You might be wondering why we aren't building neural networks yet, and I get you. Understanding the basics of tensors, and of working with TensorFlow at this level, is useful when it comes to creating custom neural network layers, loss functions, or optimizers.

The later labs will not deal with custom layers, losses, or optimizers. This is only an introduction, meant to serve as a reference whenever you want to take a step back and look at the backbone of TensorFlow's high-level API.

# Generating a tensor with random values
# We first have to create a generator object
generator = tf.random.Generator.from_seed(3)
rand_tensor = generator.normal(shape=[3,3])

print(rand_tensor)

tf.Tensor( [[-0.43640924 -1.9633987 -0.06452483] [-1.056841 1.0019137 0.6735137 ] [ 0.06987712 -1.4077919 1.0278524 ]], shape=(3, 3), dtype=float32)

Changing the seed in tf.random.Generator.from_seed(3) will change the values returned by the random function.
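The seed is what makes the generator reproducible. Two generators created from the same seed produce the same values, a different seed gives different values, and each call advances a generator's state. The small sketch below uses seeds 3 and 4, which are arbitrary.

```python
import tensorflow as tf

gen_a = tf.random.Generator.from_seed(3)
gen_b = tf.random.Generator.from_seed(3)
gen_c = tf.random.Generator.from_seed(4)

a = gen_a.normal(shape=[3, 3])
b = gen_b.normal(shape=[3, 3])
c = gen_c.normal(shape=[3, 3])
again = gen_a.normal(shape=[3, 3])  # gen_a's state has advanced: new values

print(bool(tf.reduce_all(tf.equal(a, b))))      # same seed -> identical values
print(bool(tf.reduce_all(tf.equal(a, c))))      # different seed -> different values
print(bool(tf.reduce_all(tf.equal(a, again))))  # second draw differs from the first
```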

We can also shuffle an existing tensor, whether it was created with tf.constant() or tf.Variable().

# Create a typical tensor

example_tensor = tf.constant([[1,3],

[3,4],

[4,5]])

print(example_tensor)

tf.Tensor( [[1 3] [3 4] [4 5]], shape=(3, 2), dtype=int32)

def shuffle_tensor(tensor):
    """
    Shuffle a tensor along its first dimension and print the result
    """
    # Randomly reorder the rows of the tensor
    tensor_shuffled = tf.random.shuffle(tensor)
    print(tensor_shuffled)

shuffle_tensor(example_tensor)

tf.Tensor( [[4 5] [1 3] [3 4]], shape=(3, 2), dtype=int32)

If you run it more than once, you will likely get a different order each time.

shuffle_tensor(example_tensor)

tf.Tensor( [[4 5] [3 4] [1 3]], shape=(3, 2), dtype=int32)

To prevent that, we can use tf.random.set_seed(seed_number) to get the same order/values every time.

# Set seed

tf.random.set_seed(42)

shuffle_tensor(example_tensor)

tf.Tensor( [[3 4] [4 5] [1 3]], shape=(3, 2), dtype=int32)

Every time you rerun the cell above (setting the seed and then calling shuffle_tensor), you will get the same order.

You can learn more about random number generation in the TensorFlow docs.
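In eager mode, calling tf.random.set_seed resets TensorFlow's internal sequence of operation seeds. Setting the same global seed before each shuffle therefore reproduces the same order. The minimal check below reuses the example values from above.

```python
import tensorflow as tf

example_tensor = tf.constant([[1, 3], [3, 4], [4, 5]])

tf.random.set_seed(42)
first = tf.random.shuffle(example_tensor)

tf.random.set_seed(42)  # resetting the global seed restarts the sequence
second = tf.random.shuffle(example_tensor)

print(bool(tf.reduce_all(tf.equal(first, second))))  # True: same order both times
```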

4.5 Selecting Data in a Tensor

We can also select values in any tensor, whether it is one-dimensional or multidimensional.

# Let's create a tensor

tensor_1d = tf.constant([1,2,3,4,5,6,7])

Let's select some values in the tensor created above.

print('The first value:', tensor_1d[0].numpy())
print('The third value:', tensor_1d[2].numpy())
print('The 4th and 5th values:', tensor_1d[3:5].numpy())
print('From the 4th value to the last:', tensor_1d[3:].numpy())
print('The last value:', tensor_1d[-1].numpy())
print('The value before the last:', tensor_1d[-2].numpy())
print('All tensor values:', tensor_1d[:].numpy())

The first value: 1
The third value: 3
The 4th and 5th values: [4 5]
From the 4th value to the last: [4 5 6 7]
The last value: 7
The value before the last: 6
All tensor values: [1 2 3 4 5 6 7]

Selecting (indexing) data in a tensor works much like indexing Python lists and NumPy arrays.

Let's also select data in a 2D tensor.

tensor_2d = tf.constant([[1,3],

[3,4],

[4,5]])

print('The first row:', tensor_2d[0,:].numpy())
print('The second column:', tensor_2d[:,1].numpy())
print('The last row:', tensor_2d[-1,:].numpy())
print('The first value in the last row:', tensor_2d[-1,0].numpy())
print('The last value in the last column:', tensor_2d[-1,-1].numpy())

The first row: [1 3]
The second column: [3 4 5]
The last row: [4 5]
The first value in the last row: 4
The last value in the last column: 5
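Slices cover contiguous ranges. To pick arbitrary, non-contiguous positions (what NumPy calls fancy indexing), TensorFlow provides tf.gather. The sketch below reuses the same example values, and the chosen positions are arbitrary.

```python
import tensorflow as tf

tensor_1d = tf.constant([1, 2, 3, 4, 5, 6, 7])
tensor_2d = tf.constant([[1, 3], [3, 4], [4, 5]])

# Pick the elements at positions 0, 2 and 6
picked = tf.gather(tensor_1d, [0, 2, 6])
print(picked.numpy())          # [1 3 7]

# Pick rows 0 and 2 of the 2D tensor (axis=0 indexes rows)
rows = tf.gather(tensor_2d, [0, 2], axis=0)
print(rows.numpy())            # rows [1 3] and [4 5]

# Every second element, using a step in the slice
every_other = tensor_1d[::2]
print(every_other.numpy())     # [1 3 5 7]
```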

4.6 Performing Operations on Tensors

Most numeric operations can be performed on tensors. Let's look at a few of them.

# Creating example tensors

tensor_1 = tf.constant([1,2,3])

tensor_2 = tf.constant([4,5,6])

# Adding a scalar value to a tensor

print(tensor_1 + 4)

tf.Tensor([5 6 7], shape=(3,), dtype=int32)

# Adding two tensors

print(tensor_1 + tensor_2)

tf.Tensor([5 7 9], shape=(3,), dtype=int32)

# Can also add with tf.add() or tf.math.add()

print(tf.add(tensor_1, tensor_2))

tf.Tensor([5 7 9], shape=(3,), dtype=int32)

# Element-wise multiplication with tf.multiply()

print(tf.multiply(tensor_1, tensor_2))

tf.Tensor([ 4 10 18], shape=(3,), dtype=int32)

You can learn more in the official docs, specifically the tf.math module. Almost all math operations can be done on tensors.
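tf.multiply (and the * operator) multiplies element by element, while tf.matmul (and the @ operator) computes the matrix product. The sketch below uses two arbitrary 2x2 matrices, plus a couple of reductions from tf.math.

```python
import tensorflow as tf

a = tf.constant([[1, 2], [3, 4]])
b = tf.constant([[5, 6], [7, 8]])

elementwise = tf.multiply(a, b)   # same as a * b -> [[5, 12], [21, 32]]
matrix_product = tf.matmul(a, b)  # same as a @ b -> [[19, 22], [43, 50]]
total = tf.reduce_sum(a)          # sum of all elements -> 10

# Cast first: reduce_mean on an int tensor would return an int (truncated) mean
row_means = tf.reduce_mean(tf.cast(a, tf.float32), axis=1)  # -> [1.5, 3.5]

print(elementwise.numpy())
print(matrix_product.numpy())
print(total.numpy(), row_means.numpy())
```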

4.7 Manipulating the Shape of a Tensor

Sometimes you will want to reshape a tensor. Here is how to go about it.

print(example_tensor)

tf.Tensor( [[1 3] [3 4] [4 5]], shape=(3, 2), dtype=int32)

Let's reshape the above tensor into (2,3).

tens_reshaped = tf.reshape(example_tensor, [2,3])

print(tens_reshaped)

tf.Tensor( [[1 3 3] [4 4 5]], shape=(2, 3), dtype=int32)

# Also to (6,1)

print(tf.reshape(example_tensor, [6,1]))

tf.Tensor( [[1] [3] [3] [4] [4] [5]], shape=(6, 1), dtype=int32)

# You can also get the shape of a tensor as a Python list

print(example_tensor.shape.as_list())

[3, 2]

# You can also flatten a tensor

print(tf.reshape(example_tensor, [-1]))

tf.Tensor([1 3 3 4 4 5], shape=(6,), dtype=int32)

There are rules for reshaping a tensor: the new shape must contain the same total number of elements as the original. For example, the line below would raise an error, because example_tensor has 6 elements and a (5, 5) tensor would need 25.

# Running the cell below will create an error

# print(tf.reshape(example_tensor, [5,5]))
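Because the total element count is fixed, you can pass -1 for one dimension and let TensorFlow infer it. The sketch below reuses the same example values. It demonstrates -1, contrasts reshaping with transposing (a common mix-up), and catches the error from the impossible (5, 5) reshape instead of crashing.

```python
import tensorflow as tf

example_tensor = tf.constant([[1, 3], [3, 4], [4, 5]])  # shape (3, 2), 6 elements

# -1 asks TensorFlow to infer that dimension from the total element count
inferred = tf.reshape(example_tensor, [-1, 3])
print(inferred.shape)  # (2, 3)

# Reshape keeps the row-major order of elements; transpose swaps the axes
reshaped = tf.reshape(example_tensor, [2, 3])    # rows [1 3 3] and [4 4 5]
transposed = tf.transpose(example_tensor)        # rows [1 3 4] and [3 4 5]
print(reshaped.numpy())
print(transposed.numpy())

# (5, 5) would need 25 elements, so this reshape raises an error
reshape_failed = False
try:
    tf.reshape(example_tensor, [5, 5])
except tf.errors.InvalidArgumentError as err:
    reshape_failed = True
    print('Reshape failed:', type(err).__name__)
```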

That's it for the introduction to TensorFlow and the basics of tensors. 'TensorFlow API revolves around tensors' (credit: Aurélien Géron), and that's why we didn't jump straight into doing big things with TF's high-level API without first looking at what makes them possible.