This notebook was created by Jean de Dieu Nyandwi for the love of the machine learning community. For any feedback, errors, or suggestions, he can be reached by email (johnjw7084 at gmail dot com), Twitter, or LinkedIn.

CNN for Real World Dataset and Image Augmentation

1. Intro - Real World Datasets and Image Augmentation

Real-world image datasets are rarely ready to use, and most of the time they are too small.

Training an effective computer vision system usually requires a huge number of images, but that many images are not always available. When MIT Technology Review asked Andrew Ng about the size of the data required to build an AI project, he said:

"Machine learning is so diverse that it’s become really hard to give one-size-fits-all answers. I’ve worked on problems where I had about 200 to 300 million images. I’ve also worked on problems where I had 10 images, and everything in between. When I look at manufacturing applications, I think something like tens or maybe a hundred images for a defect class is not unusual, but there’s very wide variance even within the factory".

We cannot overemphasize the value of having data that is both good and plentiful, although that combination is not always possible.

Given that we may sometimes have only a handful of images, how should we proceed? Are there ways to expand a small image dataset and, as a result, boost the performance of the machine learning model?

With the advancement of machine learning techniques, it has become possible to boost performance metrics simply by expanding the existing dataset. The technique of synthesizing new data (images) from existing data is called data augmentation.

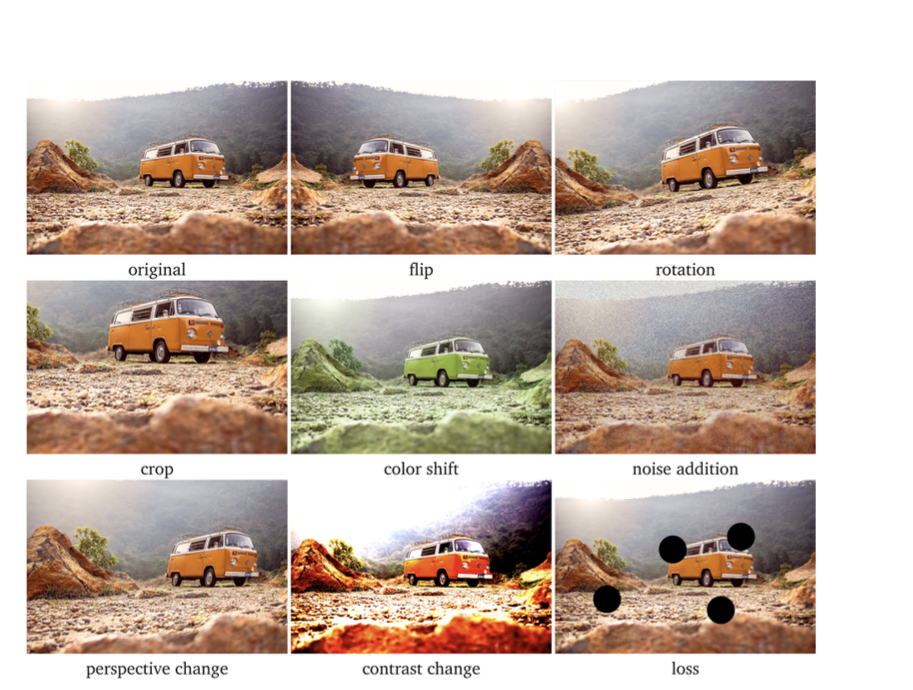

Image datasets can be augmented in various ways, including:

- Flipping the image, vertically or horizontally.

- Cropping the image.

- Changing contrast and color of the image.

- Adding noise to the data.

- Rotating the image by a given angle.
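To make these operations concrete, here is a minimal NumPy sketch that applies each of them to an image stored as a `(height, width, channels)` array. It is an illustration only, not how Keras implements augmentation internally; the helper names are hypothetical, and rotation is limited to multiples of 90 degrees (via `np.rot90`) to keep the sketch free of extra dependencies.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

def flip(image, horizontal=True):
    # A horizontal flip mirrors the width axis; a vertical flip mirrors the height axis.
    return image[:, ::-1] if horizontal else image[::-1, :]

def crop(image, top, left, height, width):
    # Keep a rectangular window of the original image.
    return image[top:top + height, left:left + width]

def adjust_contrast(image, factor):
    # Scale each pixel's distance from the mean intensity, then clip to [0, 255].
    mean = image.mean()
    return np.clip((image - mean) * factor + mean, 0, 255)

def add_noise(image, std=10.0):
    # Add zero-mean Gaussian noise and clip back to the valid pixel range.
    return np.clip(image + rng.normal(0.0, std, size=image.shape), 0, 255)

def rotate90(image, times=1):
    # Rotate by multiples of 90 degrees in the height/width plane.
    return np.rot90(image, k=times, axes=(0, 1))

# A toy 4x6 RGB "image" with random pixel values.
image = rng.integers(0, 256, size=(4, 6, 3)).astype("float32")

print(flip(image).shape)              # (4, 6, 3)
print(crop(image, 1, 1, 2, 3).shape)  # (2, 3, 3)
print(rotate90(image).shape)          # (6, 4, 3)
```

Each function returns a new array, so one source image can yield several distinct training samples.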

The image below summarizes many of the transformations that can be used for image data augmentation.

2. Getting Started: Real World Datasets and Overfitting

One of the biggest challenges in training machine learning models on real-world datasets is overfitting.

A model overfits when it memorizes the training data instead of learning patterns that generalize, typically because there are too few training samples or too little diversity among them. Data augmentation increases the number of training samples and introduces more diversity into the images.

Data augmentation is a common and effective remedy for overfitting on small image datasets. To see this in action, let's first train a quick cat and dog classifier without augmentation. After that, we will augment the images to reduce overfitting.

Without Data Augmentation: Training Cat and Dog Classifier

We are going to see why data augmentation is needed by training a cat and dog classifier.

Along the way, we will let the results guide the next steps.

2.1 Loading and Preparing Cat and Dog Data

Imports

import tensorflow as tf
from tensorflow import keras
import os
import zipfile
import matplotlib.pyplot as plt
import numpy as np

Getting the data

We are going to use a filtered version of the data. The original dataset contains over 20,000 images.

# Load the data into the workspace
!wget --no-check-certificate \
    https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip \
    -O /tmp/cats_and_dogs_filtered.zip

--2021-09-18 06:21:48-- https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip Resolving storage.googleapis.com (storage.googleapis.com)... 209.85.147.128, 142.250.125.128, 142.250.136.128, ... Connecting to storage.googleapis.com (storage.googleapis.com)|209.85.147.128|:443... connected. HTTP request sent, awaiting response... 200 OK Length: 68606236 (65M) [application/zip] Saving to: ‘/tmp/cats_and_dogs_filtered.zip’ /tmp/cats_and_dogs_ 100%[===================>] 65.43M 178MB/s in 0.4s 2021-09-18 06:21:48 (178 MB/s) - ‘/tmp/cats_and_dogs_filtered.zip’ saved [68606236/68606236]

# Extract the zip file
zip_dir = '/tmp/cats_and_dogs_filtered.zip'
zip_ref = zipfile.ZipFile(zip_dir, 'r')
zip_ref.extractall('/tmp')
zip_ref.close()
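As a side note, the same extraction can be written with `zipfile.ZipFile` used as a context manager, which closes the archive even if extraction fails. The sketch below wraps it in a hypothetical `extract_zip` helper and checks it against a tiny archive built in a temporary directory, so it runs without downloading anything.

```python
import os
import tempfile
import zipfile

def extract_zip(zip_path, dest_dir):
    # The with-block closes the archive automatically, even if an error occurs.
    with zipfile.ZipFile(zip_path, "r") as zip_ref:
        zip_ref.extractall(dest_dir)
        return zip_ref.namelist()

# Build a tiny archive that mimics the dataset layout, then extract it.
with tempfile.TemporaryDirectory() as tmp:
    zip_path = os.path.join(tmp, "toy.zip")
    with zipfile.ZipFile(zip_path, "w") as zf:
        zf.writestr("toy_dataset/train/cats/cat.0.jpg", b"fake image bytes")
    names = extract_zip(zip_path, tmp)
    extracted = os.path.exists(os.path.join(tmp, "toy_dataset", "train", "cats", "cat.0.jpg"))

print(names)      # ['toy_dataset/train/cats/cat.0.jpg']
print(extracted)  # True
```

In the notebook, this would be called as `extract_zip('/tmp/cats_and_dogs_filtered.zip', '/tmp')`.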

Now that the images are extracted (you can verify this in the Colab file browser), let's get the training and validation directories from the main directory.

main_dir = '/tmp/cats_and_dogs_filtered'
train_dir = os.path.join(main_dir, 'train')
val_dir = os.path.join(main_dir, 'validation')

Next, let's get the paths to the cat and dog subdirectories inside the training and validation directories defined above.

train_cat_dir = os.path.join(train_dir, 'cats')
train_dog_dir = os.path.join(train_dir, 'dogs')
val_cat_dir = os.path.join(val_dir, 'cats')
val_dog_dir = os.path.join(val_dir, 'dogs')

Our directories are now organized. Let's peek into them.

os.listdir(main_dir)

['vectorize.py', 'train', 'validation']

os.listdir(train_cat_dir)[:10]

['cat.504.jpg', 'cat.148.jpg', 'cat.560.jpg', 'cat.506.jpg', 'cat.182.jpg', 'cat.300.jpg', 'cat.178.jpg', 'cat.791.jpg', 'cat.260.jpg', 'cat.328.jpg']

Let's walk through the directories and see the number of images in each directory.

# dirpath avoids shadowing Python's built-in dir()
for dirpath, dirnames, filenames in os.walk(main_dir):
    print(f"Found {len(dirnames)} directories and {len(filenames)} images in {dirpath}")

Found 2 directories and 1 images in /tmp/cats_and_dogs_filtered
Found 2 directories and 0 images in /tmp/cats_and_dogs_filtered/train
Found 0 directories and 1000 images in /tmp/cats_and_dogs_filtered/train/dogs
Found 0 directories and 1000 images in /tmp/cats_and_dogs_filtered/train/cats
Found 2 directories and 0 images in /tmp/cats_and_dogs_filtered/validation
Found 0 directories and 500 images in /tmp/cats_and_dogs_filtered/validation/dogs
Found 0 directories and 500 images in /tmp/cats_and_dogs_filtered/validation/cats

There are 3,000 images: 2,000 in the training set and 1,000 in the validation set, split evenly between cats and dogs. (The single file in the main directory is vectorize.py, not an image.)
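The per-class counts can also be collected into a dictionary, which makes it easy to check the class balance programmatically. This is a minimal sketch: the helper name `count_images` and the set of accepted image extensions are assumptions, and the example builds a small fake directory tree instead of using the real dataset.

```python
import os
import tempfile

IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")  # assumed set of image file types

def count_images(root_dir):
    """Return {relative_subdirectory: number_of_image_files} for folders that contain images."""
    counts = {}
    for dirpath, _dirnames, filenames in os.walk(root_dir):
        n_images = sum(1 for f in filenames if f.lower().endswith(IMAGE_EXTENSIONS))
        if n_images:
            counts[os.path.relpath(dirpath, root_dir)] = n_images
    return counts

# Toy tree: train/cats and train/dogs with 3 images each, plus a non-image file at the root.
with tempfile.TemporaryDirectory() as root:
    for split, cls, n in [("train", "cats", 3), ("train", "dogs", 3)]:
        folder = os.path.join(root, split, cls)
        os.makedirs(folder)
        for i in range(n):
            open(os.path.join(folder, f"{cls[:-1]}.{i}.jpg"), "w").close()
    open(os.path.join(root, "vectorize.py"), "w").close()
    counts = count_images(root)

print(counts)
```

Pointed at `main_dir`, it should report 1,000 images in each training class and 500 in each validation class, and it skips vectorize.py because that file is not an image.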

Let's now generate a dataset to train the model.

Preparing the Dataset

from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Rescale the pixel values to the range [0, 1]
train_gen = ImageDataGenerator(rescale=1/255.0)
val_gen = ImageDataGenerator(rescale=1/255.0)

batch_size = 20
image_size = (180, 180)

train_data = train_gen.flow_from_directory(train_dir,
                                           batch_size=batch_size,
                                           class_mode='binary',
                                           target_size=image_size)

val_data = val_gen.flow_from_directory(val_dir,
                                       batch_size=batch_size,
                                       class_mode='binary',
                                       target_size=image_size)

Found 2000 images belonging to 2 classes.
Found 1000 images belonging to 2 classes.

Before building a model, let's visualize some images. It's always good practice.

data_for_viz = tf.keras.preprocessing.image_dataset_from_directory(
    train_dir,
    image_size=(180, 180))

Found 2000 files belonging to 2 classes.

def image_viz(dataset):
    plt.figure(figsize=(12, 8))
    # Take a single batch (32 images by default) and plot its first 12 images
    for images, labels in dataset.take(1):
        for i in range(12):
            ax = plt.subplot(3, 4, i + 1)
            plt.imshow(images[i].numpy().astype("uint8"))
            plt.title(int(labels[i]))
            plt.axis("off")

image_viz(data_for_viz)

2.2 Building, Compiling and Training a Model

def classifier():
    model = tf.keras.models.Sequential([
        # First convolution and pooling layer
        tf.keras.layers.Conv2D(32, (3, 3), activation='relu', input_shape=(180, 180, 3)),
        tf.keras.layers.MaxPooling2D(2, 2),
        # Second convolution and pooling layer
        tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
        tf.keras.layers.MaxPooling2D(2, 2),
        # Third convolution and pooling layer
        tf.keras.layers.Conv2D(128, (3, 3), activation='relu'),
        tf.keras.layers.MaxPooling2D(2, 2),
        # Flatten the feature maps into a 1D vector
        tf.keras.layers.Flatten(),
        # Fully connected layers
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dense(1, activation='sigmoid')
    ])

    # Compile the model: specify the optimizer, the loss, and the metric to track during training
    model.compile(
        optimizer=tf.keras.optimizers.RMSprop(),
        loss='binary_crossentropy',
        metrics=['accuracy']
    )
    return model
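It helps to work out what this architecture does to a 180×180×3 input. With the Keras defaults used here (`padding='valid'` and stride 1 for `Conv2D`, 2×2 pooling with stride 2 for `MaxPooling2D(2, 2)`), each 3×3 convolution trims 2 pixels from each spatial dimension and each pooling layer halves it, rounding down. The sketch below redoes that arithmetic in plain Python; the numbers should match what `model.summary()` reports, but treat it as a hand calculation rather than a replacement for that call.

```python
def conv_out(size, kernel=3):
    # 'valid' convolution with stride 1: output = input - kernel + 1
    return size - kernel + 1

def pool_out(size, pool=2):
    # 'valid' max pooling with stride equal to the pool size: floor((input - pool) / pool) + 1
    return (size - pool) // pool + 1

size, channels = 180, 3
total_params = 0
for filters in (32, 64, 128):
    # Each 3x3 kernel spans all input channels, plus one bias per filter.
    total_params += 3 * 3 * channels * filters + filters
    size = pool_out(conv_out(size))
    channels = filters
    print(f"after conv({filters}) + pool: {size}x{size}x{channels}")

flattened = size * size * channels
dense_params = flattened * 128 + 128  # Dense(128): weights + biases
output_params = 128 * 1 + 1           # Dense(1): weights + bias
total_params += dense_params + output_params

print("flattened features:", flattened)      # 51200
print("Dense(128) parameters:", dense_params)
print("total parameters:", total_params)     # 6647105
```

Roughly 6.6 million parameters, almost all of them in the first Dense layer, is a lot of capacity for 2,000 training images, which helps explain why this model can memorize the training set so easily.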

# Train the model
model = classifier()

# Integer division gives whole numbers of steps: 100 training and 50 validation steps
train_steps = 2000 // batch_size
val_steps = 1000 // batch_size

history = model.fit(train_data,
                    validation_data=val_data,
                    epochs=50,
                    steps_per_epoch=train_steps,
                    validation_steps=val_steps)

Epoch 1/50 100/100 [==============================] - 45s 121ms/step - loss: 0.8243 - accuracy: 0.5435 - val_loss: 0.7136 - val_accuracy: 0.5000 Epoch 2/50 100/100 [==============================] - 12s 118ms/step - loss: 0.6378 - accuracy: 0.6455 - val_loss: 0.5870 - val_accuracy: 0.6690 Epoch 3/50 100/100 [==============================] - 12s 118ms/step - loss: 0.5446 - accuracy: 0.7245 - val_loss: 0.5848 - val_accuracy: 0.6990 Epoch 4/50 100/100 [==============================] - 12s 117ms/step - loss: 0.4387 - accuracy: 0.7955 - val_loss: 0.5780 - val_accuracy: 0.7310 Epoch 5/50 100/100 [==============================] - 12s 117ms/step - loss: 0.3505 - accuracy: 0.8525 - val_loss: 0.5507 - val_accuracy: 0.7550 Epoch 6/50 100/100 [==============================] - 12s 119ms/step - loss: 0.2380 - accuracy: 0.8940 - val_loss: 0.7112 - val_accuracy: 0.7190 Epoch 7/50 100/100 [==============================] - 12s 118ms/step - loss: 0.1427 - accuracy: 0.9425 - val_loss: 0.8400 - val_accuracy: 0.7560 Epoch 8/50 100/100 [==============================] - 12s 118ms/step - loss: 0.1045 - accuracy: 0.9620 - val_loss: 0.9308 - val_accuracy: 0.7460 Epoch 9/50 100/100 [==============================] - 12s 116ms/step - loss: 0.0593 - accuracy: 0.9785 - val_loss: 1.2529 - val_accuracy: 0.7140 Epoch 10/50 100/100 [==============================] - 12s 119ms/step - loss: 0.0575 - accuracy: 0.9815 - val_loss: 1.5428 - val_accuracy: 0.7390 Epoch 11/50 100/100 [==============================] - 12s 118ms/step - loss: 0.0421 - accuracy: 0.9870 - val_loss: 1.9313 - val_accuracy: 0.7350 Epoch 12/50 100/100 [==============================] - 12s 117ms/step - loss: 0.0349 - accuracy: 0.9875 - val_loss: 1.9470 - val_accuracy: 0.7300 Epoch 13/50 100/100 [==============================] - 12s 117ms/step - loss: 0.0599 - accuracy: 0.9875 - val_loss: 2.0286 - val_accuracy: 0.7350 Epoch 14/50 100/100 [==============================] - 12s 118ms/step - loss: 0.0267 - accuracy: 0.9925 - 
val_loss: 2.0821 - val_accuracy: 0.7330 Epoch 15/50 100/100 [==============================] - 12s 118ms/step - loss: 0.0440 - accuracy: 0.9920 - val_loss: 2.3201 - val_accuracy: 0.7280 Epoch 16/50 100/100 [==============================] - 12s 118ms/step - loss: 0.0649 - accuracy: 0.9885 - val_loss: 2.2570 - val_accuracy: 0.7180 Epoch 17/50 100/100 [==============================] - 12s 121ms/step - loss: 0.0521 - accuracy: 0.9930 - val_loss: 2.5457 - val_accuracy: 0.7230 Epoch 18/50 100/100 [==============================] - 12s 119ms/step - loss: 0.0297 - accuracy: 0.9950 - val_loss: 2.4022 - val_accuracy: 0.7310 Epoch 19/50 100/100 [==============================] - 12s 118ms/step - loss: 0.0482 - accuracy: 0.9900 - val_loss: 2.2503 - val_accuracy: 0.7210 Epoch 20/50 100/100 [==============================] - 12s 120ms/step - loss: 0.0227 - accuracy: 0.9945 - val_loss: 2.8755 - val_accuracy: 0.7150 Epoch 21/50 100/100 [==============================] - 12s 117ms/step - loss: 0.0265 - accuracy: 0.9950 - val_loss: 2.5832 - val_accuracy: 0.7010 Epoch 22/50 100/100 [==============================] - 12s 118ms/step - loss: 0.0241 - accuracy: 0.9930 - val_loss: 2.5352 - val_accuracy: 0.7140 Epoch 23/50 100/100 [==============================] - 12s 118ms/step - loss: 0.0132 - accuracy: 0.9980 - val_loss: 3.2614 - val_accuracy: 0.7200 Epoch 24/50 100/100 [==============================] - 12s 117ms/step - loss: 0.0311 - accuracy: 0.9945 - val_loss: 3.4943 - val_accuracy: 0.7230 Epoch 25/50 100/100 [==============================] - 12s 116ms/step - loss: 0.0894 - accuracy: 0.9910 - val_loss: 3.6839 - val_accuracy: 0.7070 Epoch 26/50 100/100 [==============================] - 12s 115ms/step - loss: 0.0231 - accuracy: 0.9940 - val_loss: 3.8832 - val_accuracy: 0.6890 Epoch 27/50 100/100 [==============================] - 12s 117ms/step - loss: 1.7756e-04 - accuracy: 1.0000 - val_loss: 4.3875 - val_accuracy: 0.7130 Epoch 28/50 100/100 [==============================] - 
11s 115ms/step - loss: 0.0113 - accuracy: 0.9960 - val_loss: 4.3880 - val_accuracy: 0.7220 Epoch 29/50 100/100 [==============================] - 12s 117ms/step - loss: 0.0622 - accuracy: 0.9955 - val_loss: 3.6404 - val_accuracy: 0.7020 Epoch 30/50 100/100 [==============================] - 12s 117ms/step - loss: 0.0082 - accuracy: 0.9970 - val_loss: 6.3984 - val_accuracy: 0.6690 Epoch 31/50 100/100 [==============================] - 12s 116ms/step - loss: 0.0135 - accuracy: 0.9965 - val_loss: 4.5262 - val_accuracy: 0.7060 Epoch 32/50 100/100 [==============================] - 12s 117ms/step - loss: 0.0279 - accuracy: 0.9970 - val_loss: 4.9610 - val_accuracy: 0.7290 Epoch 33/50 100/100 [==============================] - 14s 136ms/step - loss: 0.0084 - accuracy: 0.9975 - val_loss: 4.2445 - val_accuracy: 0.7160 Epoch 34/50 100/100 [==============================] - 12s 120ms/step - loss: 0.0608 - accuracy: 0.9915 - val_loss: 5.2009 - val_accuracy: 0.7000 Epoch 35/50 100/100 [==============================] - 12s 119ms/step - loss: 0.0191 - accuracy: 0.9960 - val_loss: 4.1152 - val_accuracy: 0.7220 Epoch 36/50 100/100 [==============================] - 12s 117ms/step - loss: 0.0161 - accuracy: 0.9970 - val_loss: 5.3480 - val_accuracy: 0.7060 Epoch 37/50 100/100 [==============================] - 12s 119ms/step - loss: 0.0276 - accuracy: 0.9950 - val_loss: 4.9586 - val_accuracy: 0.7090 Epoch 38/50 100/100 [==============================] - 12s 119ms/step - loss: 0.0266 - accuracy: 0.9960 - val_loss: 4.8451 - val_accuracy: 0.7210 Epoch 39/50 100/100 [==============================] - 12s 118ms/step - loss: 0.0117 - accuracy: 0.9985 - val_loss: 4.7765 - val_accuracy: 0.7290 Epoch 40/50 100/100 [==============================] - 12s 118ms/step - loss: 0.0169 - accuracy: 0.9985 - val_loss: 5.0485 - val_accuracy: 0.7170 Epoch 41/50 100/100 [==============================] - 12s 118ms/step - loss: 3.9937e-07 - accuracy: 1.0000 - val_loss: 5.2801 - val_accuracy: 0.7220 Epoch 
42/50 100/100 [==============================] - 12s 119ms/step - loss: 1.4917e-08 - accuracy: 1.0000 - val_loss: 5.5378 - val_accuracy: 0.7260 Epoch 43/50 100/100 [==============================] - 12s 119ms/step - loss: 1.6792e-08 - accuracy: 1.0000 - val_loss: 6.1406 - val_accuracy: 0.7250 Epoch 44/50 100/100 [==============================] - 12s 117ms/step - loss: 0.1762 - accuracy: 0.9930 - val_loss: 8.8898 - val_accuracy: 0.7230 Epoch 45/50 100/100 [==============================] - 12s 118ms/step - loss: 0.0034 - accuracy: 0.9995 - val_loss: 6.9045 - val_accuracy: 0.7220 Epoch 46/50 100/100 [==============================] - 12s 119ms/step - loss: 0.0520 - accuracy: 0.9960 - val_loss: 6.7058 - val_accuracy: 0.7110 Epoch 47/50 100/100 [==============================] - 12s 118ms/step - loss: 0.0240 - accuracy: 0.9960 - val_loss: 6.5657 - val_accuracy: 0.7270 Epoch 48/50 100/100 [==============================] - 12s 119ms/step - loss: 0.0397 - accuracy: 0.9950 - val_loss: 5.1782 - val_accuracy: 0.7260 Epoch 49/50 100/100 [==============================] - 12s 118ms/step - loss: 2.9981e-07 - accuracy: 1.0000 - val_loss: 6.1723 - val_accuracy: 0.7270 Epoch 50/50 100/100 [==============================] - 12s 118ms/step - loss: 0.0144 - accuracy: 0.9975 - val_loss: 5.6709 - val_accuracy: 0.7110

2.3 Visualizing the Model Results

# Function to plot accuracy and loss
def plot_acc_loss(acc, val_acc, loss, val_loss, epochs):
    plt.figure(figsize=(10, 5))
    plt.plot(epochs, acc, 'r', label='Training Accuracy')
    plt.plot(epochs, val_acc, 'g', label='Validation Accuracy')
    plt.title('Training and Validation Accuracy')
    plt.xlabel('Epochs')
    plt.ylabel('Accuracy')
    plt.legend(loc=0)

    # Start a new figure for the loss curves (a single plt.figure call avoids an empty extra figure)
    plt.figure(figsize=(10, 5))
    plt.plot(epochs, loss, 'b', label='Training Loss')
    plt.plot(epochs, val_loss, 'y', label='Validation Loss')
    plt.title('Training and Validation Loss')
    plt.xlabel('Epochs')
    plt.ylabel('Loss')
    plt.legend(loc=0)
    plt.show()

model_history = history.history
acc = model_history['accuracy']
val_acc = model_history['val_accuracy']
loss = model_history['loss']
val_loss = model_history['val_loss']
epochs = history.epoch

plot_acc_loss(acc, val_acc, loss, val_loss, epochs)


Clearly, our classifier overfits. The plots above show a large gap between the training and validation accuracy and loss.

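The classifier becomes nearly perfect on the training images (training accuracy around 99 to 100% in the later epochs, with training loss close to zero), but it does not get better on the validation images: after the first few epochs, validation accuracy hovers between roughly 67% and 76%, while validation loss climbs from its lowest value of about 0.55 at epoch 5 to above 5 by the end of training.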
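One way to put numbers on this is to read the metrics stored in `history.history`. The sketch below uses a hypothetical helper, `generalization_gap`, on a dictionary shaped like `history.history` and filled with the first seven epochs copied from the training log above; with the real object you would pass `history.history` directly.

```python
def generalization_gap(history_dict):
    """Summarize overfitting signals from a Keras-style history dictionary."""
    acc = history_dict["accuracy"]
    val_acc = history_dict["val_accuracy"]
    val_loss = history_dict["val_loss"]
    # 1-based epoch with the lowest validation loss.
    best_epoch = min(range(len(val_loss)), key=val_loss.__getitem__) + 1
    return {
        "final_accuracy_gap": acc[-1] - val_acc[-1],
        "best_val_loss_epoch": best_epoch,
        "val_loss_increase_since_best": val_loss[-1] - min(val_loss),
    }

# First 7 epochs copied from the training log of the model without augmentation.
history_dict = {
    "accuracy":     [0.5435, 0.6455, 0.7245, 0.7955, 0.8525, 0.8940, 0.9425],
    "val_accuracy": [0.5000, 0.6690, 0.6990, 0.7310, 0.7550, 0.7190, 0.7560],
    "loss":         [0.8243, 0.6378, 0.5446, 0.4387, 0.3505, 0.2380, 0.1427],
    "val_loss":     [0.7136, 0.5870, 0.5848, 0.5780, 0.5507, 0.7112, 0.8400],
}

summary = generalization_gap(history_dict)
print(summary["best_val_loss_epoch"])  # 5
```

Even within these first seven epochs, validation loss bottoms out at epoch 5 and then rises while training loss keeps falling, which is the typical signature of overfitting.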

This shows why a technique such as data augmentation is needed. By expanding the training images and introducing more variety into them, overfitting can potentially be reduced. That is what we are going to do in the next section.

3. Image Augmentation with ImageDataGenerator

ImageDataGenerator is a Keras utility for loading and augmenting images. It is part of the image preprocessing module (tf.keras.preprocessing.image).

The biggest advantage of ImageDataGenerator is that it augments images on the fly, for example as you load them from a directory.

The original data directory is not modified at all: each image is loaded and augmented in memory at the same time.
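The idea of on-the-fly augmentation can be illustrated with a plain Python generator: each time a batch is requested, fresh random transformations are applied to copies of the source images, so every epoch sees slightly different versions while the originals stay untouched. This is a simplified sketch, not ImageDataGenerator's actual implementation; `augmenting_batches` is a hypothetical name, and only random horizontal flips are applied.

```python
import numpy as np

def augmenting_batches(images, labels, batch_size, seed=None):
    """Yield (augmented_batch, label_batch) pairs forever without modifying `images`.

    `images` is assumed to have shape (num_images, height, width, channels).
    """
    rng = np.random.default_rng(seed)
    num_images = len(images)
    while True:
        order = rng.permutation(num_images)  # reshuffle on every pass over the data
        for start in range(0, num_images, batch_size):
            idx = order[start:start + batch_size]
            batch = images[idx].copy()  # work on a copy; the source array is never touched
            # Flip each image in the batch horizontally with probability 0.5.
            flip = rng.random(len(idx)) < 0.5
            batch[flip] = batch[flip][:, :, ::-1]
            yield batch, labels[idx]

# Toy data: 4 tiny 2x3 single-channel "images" and their labels.
images = np.arange(4 * 2 * 3, dtype="float32").reshape(4, 2, 3, 1)
labels = np.array([0, 1, 0, 1])
original = images.copy()

batches = augmenting_batches(images, labels, batch_size=2, seed=42)
batch, batch_labels = next(batches)
print(batch.shape)                       # (2, 2, 3, 1)
print(np.array_equal(images, original))  # True
```

Because the transformations are drawn anew for every batch, the model rarely sees exactly the same pixels twice, yet nothing extra is ever written to disk.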

3.1 Loading the Data Again

# Download the data into the workspace
!wget --no-check-certificate \
    https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip \
    -O /tmp/cats_and_dogs_filtered.zip

# Extract the zip file
zip_dir = '/tmp/cats_and_dogs_filtered.zip'
zip_ref = zipfile.ZipFile(zip_dir, 'r')
zip_ref.extractall('/tmp')
zip_ref.close()

# Get training and val directories
main_dir = '/tmp/cats_and_dogs_filtered'
train_dir = os.path.join(main_dir, 'train')
val_dir = os.path.join(main_dir, 'validation')

train_cat_dir = os.path.join(train_dir, 'cats')
train_dog_dir = os.path.join(train_dir, 'dogs')
val_cat_dir = os.path.join(val_dir, 'cats')
val_dog_dir = os.path.join(val_dir, 'dogs')

--2021-09-18 06:32:33-- https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip Resolving storage.googleapis.com (storage.googleapis.com)... 74.125.70.128, 74.125.201.128, 74.125.202.128, ... Connecting to storage.googleapis.com (storage.googleapis.com)|74.125.70.128|:443... connected. HTTP request sent, awaiting response... 200 OK Length: 68606236 (65M) [application/zip] Saving to: ‘/tmp/cats_and_dogs_filtered.zip’ /tmp/cats_and_dogs_ 100%[===================>] 65.43M 169MB/s in 0.4s 2021-09-18 06:32:34 (169 MB/s) - ‘/tmp/cats_and_dogs_filtered.zip’ saved [68606236/68606236]

3.2 Apply Data Augmentation

We are going to use ImageDataGenerator to generate augmented images.

Below are some of the options available in ImageDataGenerator, with explanations.

train_imagenerator = ImageDataGenerator(
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    fill_mode='nearest')

rotation_range is a value in degrees (0–180) within which to randomly rotate images.

width_shift_range and height_shift_range are ranges, expressed as a fraction of the total width or height, within which to randomly translate pictures horizontally or vertically.

shear_range sets the intensity of random shearing; the Keras documentation defines it as a shear angle in degrees.

zoom_range is for zooming pictures randomly.

horizontal_flip randomly flips images horizontally (each image has a 50% chance of being flipped, so about half of them are). There is also a vertical_flip option.

fill_mode is the strategy used to fill in newly created pixels, which can appear after a rotation or a width/height shift.
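To get a feel for what these ranges mean for our 180×180 images, the sketch below samples one random set of transformation parameters following, to my understanding, how ImageDataGenerator interprets them: a rotation angle drawn uniformly from [-rotation_range, rotation_range] degrees, shift ranges below 1 treated as fractions of the image size, and zoom factors drawn from [1 - zoom_range, 1 + zoom_range]. It then mimics `fill_mode='nearest'` for a horizontal shift by repeating the edge pixels. `sample_params` and `shift_right_nearest` are hypothetical helpers, not Keras functions.

```python
import numpy as np

rng = np.random.default_rng(seed=1)

def sample_params(height, width, rotation_range=40, width_shift_range=0.2,
                  height_shift_range=0.2, zoom_range=0.2):
    # Rotation angle in degrees, uniform in [-rotation_range, rotation_range].
    theta = rng.uniform(-rotation_range, rotation_range)
    # Fractional shift ranges are converted to pixels using the image size.
    shift_x_px = rng.uniform(-width_shift_range, width_shift_range) * width
    shift_y_px = rng.uniform(-height_shift_range, height_shift_range) * height
    # zoom_range=0.2 means zoom factors between 0.8 and 1.2 on each axis.
    zoom_x, zoom_y = rng.uniform(1 - zoom_range, 1 + zoom_range, size=2)
    return {"theta": theta, "shift_x_px": shift_x_px, "shift_y_px": shift_y_px,
            "zoom_x": zoom_x, "zoom_y": zoom_y}

def shift_right_nearest(image, pixels):
    # Shift a 2D image right by `pixels` and fill the uncovered columns by
    # repeating the leftmost column, similar to fill_mode='nearest' at the edges.
    if pixels == 0:
        return image.copy()
    padded = np.pad(image, ((0, 0), (pixels, 0)), mode="edge")
    return padded[:, :image.shape[1]]

params = sample_params(180, 180)
print({k: round(float(v), 2) for k, v in params.items()})

row = np.array([[1, 2, 3, 4, 5]])
print(shift_right_nearest(row, 2))  # [[1 1 1 2 3]]
```

With width_shift_range=0.2, a 180-pixel-wide image is shifted by at most 36 pixels in either direction, and the gap that opens up is filled with copies of the nearest edge pixels rather than black.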

See the ImageDataGenerator documentation; it is an interesting read, and it covers more preprocessing options that you might need in future projects.

Let's see this in practice! We will start by creating train_imagenerator, an image generator for the training set.

# Creating training image data generator
from tensorflow.keras.preprocessing.image import ImageDataGenerator

train_imagenerator = ImageDataGenerator(
    rescale=1/255.,
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    fill_mode='nearest'
)

We will also create val_imagenerator. Unlike the training generator, it applies no augmentation, so the validation images reflect the real images the model will face; it only rescales the image pixels from their usual 0–255 range to values between 0 and 1. Keeping inputs in a small range like this generally makes neural network training more stable and helps it converge faster.
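Concretely, `rescale=1/255.` multiplies every pixel value by 1/255, mapping 0 to 0.0 and 255 to 1.0. The small NumPy sketch below shows only that arithmetic on a few sample pixel values; it illustrates the effect of the `rescale` argument, not ImageDataGenerator's own code path.

```python
import numpy as np

# A few sample 8-bit pixel values.
pixels = np.array([0, 51, 128, 255], dtype=np.uint8)

# Cast to float32 first so the result is a floating-point array, then scale by 1/255.
rescaled = pixels.astype("float32") * (1 / 255.0)
print(rescaled)
```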

# Validation image generator

val_imagenerator = ImageDataGenerator(rescale=1/255.)

After creating the training and validation generators, let's use their flow_from_directory method to load batches of images, with labels, from our two directories.

Below is how the training and validation directories are structured:

cats_and_dogs_filtered/
    train/
        cats/
            cat.0.jpg
            cat.1.jpg
            cat.2.jpg
        dogs/
            dog.0.jpg
            dog.1.jpg
            dog.2.jpg
    validation/
        cats/
            cat.0.jpg
            cat.1.jpg
        dogs/
            dog.0.jpg
            dog.1.jpg

# Load training images in batches of 20 while applying augmentation

batch_size = 20
target_size = (180, 180)

train_generator = train_imagenerator.flow_from_directory(
    train_dir,                # parent directory must be specified
    target_size=target_size,  # all images will be resized to (180, 180)
    batch_size=batch_size,
    class_mode='binary'       # binary labels (0, 1), since we will use binary_crossentropy
)

Found 2000 images belonging to 2 classes.

val_generator = val_imagenerator.flow_from_directory(
    val_dir,                  # parent directory must be specified
    target_size=target_size,  # all images will be resized to (180, 180)
    batch_size=batch_size,
    class_mode='binary'       # binary labels (0, 1), since we will use binary_crossentropy
)

Found 1000 images belonging to 2 classes.

Another great advantage of ImageDataGenerator is that it infers each image's label from the name of the folder it is in. During training, we won't need to provide the labels separately.
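The labeling rule is simple: each subdirectory of the directory passed to flow_from_directory is one class, and by default the classes are indexed in alphanumeric order of the folder names, so `cats` becomes 0 and `dogs` becomes 1 (you can confirm this with `train_generator.class_indices`). The sketch below reproduces that mapping in plain Python on a throwaway directory tree; `infer_labels` is a hypothetical helper, not part of Keras.

```python
import os
import tempfile

def infer_labels(directory):
    """Map each file under `directory` to an integer label taken from its subfolder name."""
    # One class per subdirectory, indexed in sorted (alphanumeric) order.
    classes = sorted(d for d in os.listdir(directory)
                     if os.path.isdir(os.path.join(directory, d)))
    class_indices = {name: i for i, name in enumerate(classes)}
    samples = []
    for name in classes:
        for fname in sorted(os.listdir(os.path.join(directory, name))):
            samples.append((os.path.join(name, fname), class_indices[name]))
    return class_indices, samples

# Create the folders in "wrong" order to show that sorting decides the indices.
with tempfile.TemporaryDirectory() as train_root:
    for cls in ("dogs", "cats"):
        os.makedirs(os.path.join(train_root, cls))
        open(os.path.join(train_root, cls, f"{cls[:-1]}.0.jpg"), "w").close()
    class_indices, samples = infer_labels(train_root)

print(class_indices)  # {'cats': 0, 'dogs': 1}
```

This is also why the plotted titles show 0 for cats and 1 for dogs.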

We are now ready to train our model, but first, let's visualize the augmented images.

3.3 Visualizing Augmented Images

# Get one batch of 20 augmented images and their labels
augmented_image, label = next(train_generator)

plt.figure(figsize=(12, 8))
for i in range(6):
    ax = plt.subplot(2, 3, i + 1)
    plt.imshow(augmented_image[i])
    plt.title(int(label[i]))
    plt.axis("off")

It may be hard to spot without a side-by-side comparison with non-augmented images, but if you look closely, some images are zoomed in, rotated, or flipped horizontally.

The images below are not augmented. Notice, for example, that no zoom has been applied.

# Get one batch of 20 non-augmented (only rescaled) images
non_augmented_image, label = next(train_data)

plt.figure(figsize=(12, 8))
for i in range(6):
    ax = plt.subplot(2, 3, i + 1)
    plt.imshow(non_augmented_image[i])
    plt.title(int(label[i]))
    plt.axis("off")

Now that we have visualized the augmented images, let's retrain the model on augmented images.

3.4 Retraining a Model on Augmented Images

Notice that during training, we don't have to provide the labels: ImageDataGenerator takes care of it. As the images are loaded from their directories (cats, dogs), they are augmented and labeled at the same time.

Another point worth discussing is the batch size. We have loaded our images in batches of 20, while the default batch size in Keras is usually 32. The batch size mainly affects training speed and memory use, although it can also influence how well the model trains. Larger batches use the hardware more efficiently and need fewer steps per epoch, so an epoch usually runs faster; smaller batches make each epoch slower.

The trade-off is that with a large batch size, each epoch contains fewer weight updates, so the model may need more epochs to reach good performance. I used 20 simply because it divides both set sizes evenly (2,000 / 20 = 100 training steps and 1,000 / 20 = 50 validation steps), which keeps the steps-per-epoch computation simple. A good rule of thumb is to start with 32. A great paper on this topic is Practical Recommendations for Gradient-Based Training of Deep Architectures by Yoshua Bengio.
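The arithmetic behind `train_steps` and `val_steps` is the number of images divided by the batch size. When the division is not exact, rounding up with `math.ceil` makes sure the last, smaller batch is still seen each epoch. A small sketch (the helper name `steps_per_epoch` is my own, not a Keras function):

```python
import math

def steps_per_epoch(num_images, batch_size):
    # Round up so that a final partial batch is not skipped.
    return math.ceil(num_images / batch_size)

for batch_size in (20, 32, 64):
    train = steps_per_epoch(2000, batch_size)
    val = steps_per_epoch(1000, batch_size)
    print(f"batch size {batch_size}: {train} training steps, {val} validation steps")
```

With a batch size of 20 this gives exactly 100 training and 50 validation steps; with 32 it gives 63 and 32, where the last batch of each epoch is only partially filled.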

To see what difference data augmentation makes, we will use the same model as before. Let's create it.

model_2 = classifier()

batch_size = 20
# Integer division gives whole numbers of steps: 100 training and 50 validation steps
train_steps = 2000 // batch_size
val_steps = 1000 // batch_size

history_2 = model_2.fit(
    train_generator,
    steps_per_epoch=train_steps,
    epochs=100,
    validation_data=val_generator,
    validation_steps=val_steps)

Epoch 1/100 100/100 [==============================] - 27s 262ms/step - loss: 0.9125 - accuracy: 0.5150 - val_loss: 0.6847 - val_accuracy: 0.5680 Epoch 2/100 100/100 [==============================] - 26s 260ms/step - loss: 0.7163 - accuracy: 0.5620 - val_loss: 0.7020 - val_accuracy: 0.5400 Epoch 3/100 100/100 [==============================] - 26s 260ms/step - loss: 0.6724 - accuracy: 0.6190 - val_loss: 0.6039 - val_accuracy: 0.6670 Epoch 4/100 100/100 [==============================] - 26s 261ms/step - loss: 0.6502 - accuracy: 0.6325 - val_loss: 0.6332 - val_accuracy: 0.6580 Epoch 5/100 100/100 [==============================] - 26s 261ms/step - loss: 0.6322 - accuracy: 0.6530 - val_loss: 0.5951 - val_accuracy: 0.6880 Epoch 6/100 100/100 [==============================] - 26s 261ms/step - loss: 0.6466 - accuracy: 0.6570 - val_loss: 0.5699 - val_accuracy: 0.6920 Epoch 7/100 100/100 [==============================] - 26s 259ms/step - loss: 0.6135 - accuracy: 0.6610 - val_loss: 0.5892 - val_accuracy: 0.6930 Epoch 8/100 100/100 [==============================] - 26s 260ms/step - loss: 0.6110 - accuracy: 0.6825 - val_loss: 0.7350 - val_accuracy: 0.5930 Epoch 9/100 100/100 [==============================] - 26s 260ms/step - loss: 0.5980 - accuracy: 0.6710 - val_loss: 0.5578 - val_accuracy: 0.7080 Epoch 10/100 100/100 [==============================] - 26s 260ms/step - loss: 0.6059 - accuracy: 0.6830 - val_loss: 0.5752 - val_accuracy: 0.6930 Epoch 11/100 100/100 [==============================] - 26s 259ms/step - loss: 0.5952 - accuracy: 0.6975 - val_loss: 0.5849 - val_accuracy: 0.6880 Epoch 12/100 100/100 [==============================] - 26s 259ms/step - loss: 0.5795 - accuracy: 0.7035 - val_loss: 0.5498 - val_accuracy: 0.7100 Epoch 13/100 100/100 [==============================] - 26s 260ms/step - loss: 0.5825 - accuracy: 0.6955 - val_loss: 0.5590 - val_accuracy: 0.7330 Epoch 14/100 100/100 [==============================] - 26s 259ms/step - loss: 0.5684 - accuracy: 
0.6995 - val_loss: 0.5590 - val_accuracy: 0.7390 Epoch 15/100 100/100 [==============================] - 26s 260ms/step - loss: 0.5707 - accuracy: 0.7145 - val_loss: 0.5559 - val_accuracy: 0.7290 Epoch 16/100 100/100 [==============================] - 26s 260ms/step - loss: 0.5704 - accuracy: 0.7110 - val_loss: 0.5219 - val_accuracy: 0.7530 Epoch 17/100 100/100 [==============================] - 26s 260ms/step - loss: 0.5568 - accuracy: 0.7220 - val_loss: 0.5282 - val_accuracy: 0.7450 Epoch 18/100 100/100 [==============================] - 26s 260ms/step - loss: 0.5494 - accuracy: 0.7295 - val_loss: 0.5171 - val_accuracy: 0.7490 Epoch 19/100 100/100 [==============================] - 26s 260ms/step - loss: 0.5493 - accuracy: 0.7305 - val_loss: 0.5150 - val_accuracy: 0.7580 Epoch 20/100 100/100 [==============================] - 26s 261ms/step - loss: 0.5572 - accuracy: 0.7180 - val_loss: 0.5092 - val_accuracy: 0.7490 Epoch 21/100 100/100 [==============================] - 26s 261ms/step - loss: 0.5455 - accuracy: 0.7315 - val_loss: 1.3531 - val_accuracy: 0.5490 Epoch 22/100 100/100 [==============================] - 26s 262ms/step - loss: 0.5559 - accuracy: 0.7190 - val_loss: 0.5160 - val_accuracy: 0.7360 Epoch 23/100 100/100 [==============================] - 26s 261ms/step - loss: 0.5346 - accuracy: 0.7480 - val_loss: 0.4923 - val_accuracy: 0.7530 Epoch 24/100 100/100 [==============================] - 26s 261ms/step - loss: 0.5278 - accuracy: 0.7465 - val_loss: 0.6548 - val_accuracy: 0.7320 Epoch 25/100 100/100 [==============================] - 26s 262ms/step - loss: 0.5528 - accuracy: 0.7300 - val_loss: 0.4998 - val_accuracy: 0.7710 Epoch 26/100 100/100 [==============================] - 26s 260ms/step - loss: 0.5262 - accuracy: 0.7380 - val_loss: 0.4864 - val_accuracy: 0.7750 Epoch 27/100 100/100 [==============================] - 26s 261ms/step - loss: 0.5367 - accuracy: 0.7320 - val_loss: 0.5042 - val_accuracy: 0.7560 Epoch 28/100 100/100 
[==============================] - 26s 262ms/step - loss: 0.5273 - accuracy: 0.7375 - val_loss: 0.5322 - val_accuracy: 0.7670 Epoch 29/100 100/100 [==============================] - 26s 261ms/step - loss: 0.5140 - accuracy: 0.7510 - val_loss: 0.4883 - val_accuracy: 0.7650 Epoch 30/100 100/100 [==============================] - 26s 260ms/step - loss: 0.5395 - accuracy: 0.7450 - val_loss: 0.5540 - val_accuracy: 0.7410 Epoch 31/100 100/100 [==============================] - 26s 262ms/step - loss: 0.5169 - accuracy: 0.7460 - val_loss: 0.5006 - val_accuracy: 0.7610 Epoch 32/100 100/100 [==============================] - 26s 260ms/step - loss: 0.5229 - accuracy: 0.7455 - val_loss: 0.5133 - val_accuracy: 0.7400 Epoch 33/100 100/100 [==============================] - 26s 262ms/step - loss: 0.5317 - accuracy: 0.7410 - val_loss: 0.5122 - val_accuracy: 0.7460 Epoch 34/100 100/100 [==============================] - 27s 268ms/step - loss: 0.5261 - accuracy: 0.7400 - val_loss: 0.4857 - val_accuracy: 0.7630 Epoch 35/100 100/100 [==============================] - 26s 264ms/step - loss: 0.5165 - accuracy: 0.7560 - val_loss: 0.4647 - val_accuracy: 0.7790 Epoch 36/100 100/100 [==============================] - 26s 262ms/step - loss: 0.5171 - accuracy: 0.7510 - val_loss: 0.4880 - val_accuracy: 0.7550 Epoch 37/100 100/100 [==============================] - 26s 259ms/step - loss: 0.5121 - accuracy: 0.7465 - val_loss: 0.4775 - val_accuracy: 0.7880 Epoch 38/100 100/100 [==============================] - 26s 259ms/step - loss: 0.4949 - accuracy: 0.7685 - val_loss: 0.4815 - val_accuracy: 0.7780 Epoch 39/100 100/100 [==============================] - 26s 262ms/step - loss: 0.5242 - accuracy: 0.7560 - val_loss: 0.5349 - val_accuracy: 0.7260 Epoch 40/100 100/100 [==============================] - 27s 266ms/step - loss: 0.4927 - accuracy: 0.7655 - val_loss: 0.5462 - val_accuracy: 0.7160 Epoch 41/100 100/100 [==============================] - 26s 265ms/step - loss: 0.5141 - accuracy: 0.7600 - 
val_loss: 0.5143 - val_accuracy: 0.7670 Epoch 42/100 100/100 [==============================] - 26s 264ms/step - loss: 0.4941 - accuracy: 0.7575 - val_loss: 0.5477 - val_accuracy: 0.7700 Epoch 43/100 100/100 [==============================] - 26s 263ms/step - loss: 0.4901 - accuracy: 0.7760 - val_loss: 0.5021 - val_accuracy: 0.7970 Epoch 44/100 100/100 [==============================] - 26s 261ms/step - loss: 0.5001 - accuracy: 0.7560 - val_loss: 0.5141 - val_accuracy: 0.7810 Epoch 45/100 100/100 [==============================] - 26s 264ms/step - loss: 0.5046 - accuracy: 0.7705 - val_loss: 0.4855 - val_accuracy: 0.7600 Epoch 46/100 100/100 [==============================] - 26s 264ms/step - loss: 0.4864 - accuracy: 0.7770 - val_loss: 0.4695 - val_accuracy: 0.7880 Epoch 47/100 100/100 [==============================] - 26s 264ms/step - loss: 0.4881 - accuracy: 0.7765 - val_loss: 0.5015 - val_accuracy: 0.7590 Epoch 48/100 100/100 [==============================] - 26s 262ms/step - loss: 0.4922 - accuracy: 0.7640 - val_loss: 0.5486 - val_accuracy: 0.7590 Epoch 49/100 100/100 [==============================] - 26s 259ms/step - loss: 0.4942 - accuracy: 0.7670 - val_loss: 0.4959 - val_accuracy: 0.7700 Epoch 50/100 100/100 [==============================] - 26s 261ms/step - loss: 0.5053 - accuracy: 0.7575 - val_loss: 0.6068 - val_accuracy: 0.7170 Epoch 51/100 100/100 [==============================] - 26s 263ms/step - loss: 0.4978 - accuracy: 0.7710 - val_loss: 0.4660 - val_accuracy: 0.7860 Epoch 52/100 100/100 [==============================] - 26s 261ms/step - loss: 0.4995 - accuracy: 0.7675 - val_loss: 0.7096 - val_accuracy: 0.6820 Epoch 53/100 100/100 [==============================] - 26s 262ms/step - loss: 0.5044 - accuracy: 0.7650 - val_loss: 0.4931 - val_accuracy: 0.7730 Epoch 54/100 100/100 [==============================] - 26s 262ms/step - loss: 0.4918 - accuracy: 0.7785 - val_loss: 0.4823 - val_accuracy: 0.7650 Epoch 55/100 100/100 
[==============================] - 26s 262ms/step - loss: 0.4842 - accuracy: 0.7690 - val_loss: 0.6578 - val_accuracy: 0.7260 Epoch 56/100 100/100 [==============================] - 26s 263ms/step - loss: 0.4991 - accuracy: 0.7630 - val_loss: 0.4485 - val_accuracy: 0.7990 Epoch 57/100 100/100 [==============================] - 26s 263ms/step - loss: 0.4996 - accuracy: 0.7705 - val_loss: 0.6781 - val_accuracy: 0.7250 Epoch 58/100 100/100 [==============================] - 26s 262ms/step - loss: 0.4799 - accuracy: 0.7820 - val_loss: 0.4580 - val_accuracy: 0.7920 Epoch 59/100 100/100 [==============================] - 26s 263ms/step - loss: 0.4987 - accuracy: 0.7590 - val_loss: 0.4679 - val_accuracy: 0.7710 Epoch 60/100 100/100 [==============================] - 26s 262ms/step - loss: 0.4842 - accuracy: 0.7825 - val_loss: 0.6739 - val_accuracy: 0.6980 Epoch 61/100 100/100 [==============================] - 26s 262ms/step - loss: 0.4849 - accuracy: 0.7745 - val_loss: 0.4635 - val_accuracy: 0.7890 Epoch 62/100 100/100 [==============================] - 26s 264ms/step - loss: 0.4711 - accuracy: 0.7830 - val_loss: 0.4571 - val_accuracy: 0.7850 Epoch 63/100 100/100 [==============================] - 26s 263ms/step - loss: 0.4866 - accuracy: 0.7630 - val_loss: 0.4490 - val_accuracy: 0.7980 Epoch 64/100 100/100 [==============================] - 26s 264ms/step - loss: 0.4751 - accuracy: 0.7800 - val_loss: 0.4483 - val_accuracy: 0.8010 Epoch 65/100 100/100 [==============================] - 27s 268ms/step - loss: 0.4659 - accuracy: 0.7815 - val_loss: 0.4698 - val_accuracy: 0.7900 Epoch 66/100 100/100 [==============================] - 27s 267ms/step - loss: 0.4796 - accuracy: 0.7715 - val_loss: 0.4577 - val_accuracy: 0.7840 Epoch 67/100 100/100 [==============================] - 26s 264ms/step - loss: 0.4696 - accuracy: 0.7845 - val_loss: 0.4937 - val_accuracy: 0.7580 Epoch 68/100 100/100 [==============================] - 26s 262ms/step - loss: 0.4880 - accuracy: 0.7780 - 
val_loss: 0.8981 - val_accuracy: 0.6680 Epoch 69/100 100/100 [==============================] - 26s 261ms/step - loss: 0.4778 - accuracy: 0.7730 - val_loss: 0.4467 - val_accuracy: 0.7980 Epoch 70/100 100/100 [==============================] - 26s 260ms/step - loss: 0.4663 - accuracy: 0.7800 - val_loss: 0.4448 - val_accuracy: 0.8000 Epoch 71/100 100/100 [==============================] - 26s 260ms/step - loss: 0.4749 - accuracy: 0.7795 - val_loss: 0.4991 - val_accuracy: 0.7830 Epoch 72/100 100/100 [==============================] - 26s 261ms/step - loss: 0.4772 - accuracy: 0.7805 - val_loss: 0.4556 - val_accuracy: 0.7680 Epoch 73/100 100/100 [==============================] - 26s 259ms/step - loss: 0.4586 - accuracy: 0.7780 - val_loss: 0.6288 - val_accuracy: 0.7820 Epoch 74/100 100/100 [==============================] - 26s 259ms/step - loss: 0.4761 - accuracy: 0.7830 - val_loss: 0.4684 - val_accuracy: 0.7880 Epoch 75/100 100/100 [==============================] - 26s 259ms/step - loss: 0.4898 - accuracy: 0.7905 - val_loss: 0.5107 - val_accuracy: 0.7830 Epoch 76/100 100/100 [==============================] - 26s 259ms/step - loss: 0.4798 - accuracy: 0.7770 - val_loss: 0.5129 - val_accuracy: 0.7750 Epoch 77/100 100/100 [==============================] - 26s 258ms/step - loss: 0.4637 - accuracy: 0.7780 - val_loss: 0.5715 - val_accuracy: 0.7570 Epoch 78/100 100/100 [==============================] - 26s 259ms/step - loss: 0.4799 - accuracy: 0.7825 - val_loss: 0.4657 - val_accuracy: 0.7810 Epoch 79/100 100/100 [==============================] - 26s 260ms/step - loss: 0.4726 - accuracy: 0.7975 - val_loss: 0.4772 - val_accuracy: 0.7810 Epoch 80/100 100/100 [==============================] - 26s 259ms/step - loss: 0.4624 - accuracy: 0.7915 - val_loss: 0.4837 - val_accuracy: 0.7640 Epoch 81/100 100/100 [==============================] - 26s 259ms/step - loss: 0.4544 - accuracy: 0.7950 - val_loss: 0.4874 - val_accuracy: 0.7760 Epoch 82/100 100/100 
[==============================] - 26s 256ms/step - loss: 0.4725 - accuracy: 0.7995 - val_loss: 0.4473 - val_accuracy: 0.8050 Epoch 83/100 100/100 [==============================] - 26s 256ms/step - loss: 0.4580 - accuracy: 0.7885 - val_loss: 0.6340 - val_accuracy: 0.7330 Epoch 84/100 100/100 [==============================] - 26s 257ms/step - loss: 0.4829 - accuracy: 0.7770 - val_loss: 0.9491 - val_accuracy: 0.6530 Epoch 85/100 100/100 [==============================] - 26s 255ms/step - loss: 0.4757 - accuracy: 0.7720 - val_loss: 0.5391 - val_accuracy: 0.7240 Epoch 86/100 100/100 [==============================] - 25s 255ms/step - loss: 0.4659 - accuracy: 0.7815 - val_loss: 0.4597 - val_accuracy: 0.7820 Epoch 87/100 100/100 [==============================] - 26s 256ms/step - loss: 0.4700 - accuracy: 0.7940 - val_loss: 0.5405 - val_accuracy: 0.7680 Epoch 88/100 100/100 [==============================] - 26s 257ms/step - loss: 0.4764 - accuracy: 0.7755 - val_loss: 0.4748 - val_accuracy: 0.7810 Epoch 89/100 100/100 [==============================] - 26s 257ms/step - loss: 0.4651 - accuracy: 0.8025 - val_loss: 0.5026 - val_accuracy: 0.8060 Epoch 90/100 100/100 [==============================] - 26s 257ms/step - loss: 0.4647 - accuracy: 0.7745 - val_loss: 0.4729 - val_accuracy: 0.8000 Epoch 91/100 100/100 [==============================] - 26s 257ms/step - loss: 0.4742 - accuracy: 0.7975 - val_loss: 0.4547 - val_accuracy: 0.8030 Epoch 92/100 100/100 [==============================] - 26s 259ms/step - loss: 0.4620 - accuracy: 0.7840 - val_loss: 0.5222 - val_accuracy: 0.7700 Epoch 93/100 100/100 [==============================] - 26s 258ms/step - loss: 0.4495 - accuracy: 0.7955 - val_loss: 0.4756 - val_accuracy: 0.8070 Epoch 94/100 100/100 [==============================] - 26s 259ms/step - loss: 0.4627 - accuracy: 0.7865 - val_loss: 0.4523 - val_accuracy: 0.7990 Epoch 95/100 100/100 [==============================] - 26s 259ms/step - loss: 0.4650 - accuracy: 0.7850 - 
val_loss: 0.5393 - val_accuracy: 0.7630 Epoch 96/100 100/100 [==============================] - 26s 258ms/step - loss: 0.4628 - accuracy: 0.7860 - val_loss: 0.4386 - val_accuracy: 0.8020 Epoch 97/100 100/100 [==============================] - 26s 258ms/step - loss: 0.4665 - accuracy: 0.7955 - val_loss: 0.4350 - val_accuracy: 0.8090 Epoch 98/100 100/100 [==============================] - 26s 259ms/step - loss: 0.4646 - accuracy: 0.7860 - val_loss: 0.4551 - val_accuracy: 0.7960 Epoch 99/100 100/100 [==============================] - 26s 261ms/step - loss: 0.4780 - accuracy: 0.7760 - val_loss: 0.5404 - val_accuracy: 0.7260 Epoch 100/100 100/100 [==============================] - 26s 258ms/step - loss: 0.4772 - accuracy: 0.7750 - val_loss: 0.4480 - val_accuracy: 0.7890

3.5 Visualizing The Model Results¶

model_history_2 = history_2.history

acc = model_history_2['accuracy']
val_acc = model_history_2['val_accuracy']
loss = model_history_2['loss']
val_loss = model_history_2['val_loss']
epochs = history_2.epoch

plot_acc_loss(acc, val_acc, loss, val_loss, epochs)


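The results are not excellent, but they are a lot better than what we got without data augmentation. Recall that our earlier model was overfitting. Now, although the curves are not smooth, training and validation accuracy move together, and validation accuracy reaches about 0.80 in several of the later epochs. That is a big improvement.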
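To put numbers on "better than before" instead of only reading the plot, a small helper can summarize a Keras `History.history` dict: the epoch with the best validation accuracy and the average gap between training and validation accuracy over the last few epochs (a large positive gap is one rough sign of overfitting). This is a minimal sketch: `summarize_history` and its `start_epoch` and `last_n` parameters are hypothetical names, not part of Keras, and the sample values are copied from epochs 98 to 100 of the training log above.

```python
def summarize_history(history_dict, start_epoch=1, last_n=5):
    """Summarize a Keras-style history dict (metric name -> per-epoch values).

    Hypothetical helper: start_epoch is the epoch number of the first entry,
    last_n is how many final epochs the train/val gap is averaged over.
    """
    acc = history_dict["accuracy"]
    val_acc = history_dict["val_accuracy"]

    # Index of the epoch with the highest validation accuracy
    best_idx = max(range(len(val_acc)), key=lambda i: val_acc[i])

    # Mean (train - val) accuracy over the last epochs; large and positive hints at overfitting
    n = min(last_n, len(acc))
    gap = sum(a - v for a, v in zip(acc[-n:], val_acc[-n:])) / n

    return {
        "best_epoch": start_epoch + best_idx,
        "best_val_accuracy": val_acc[best_idx],
        "mean_train_val_gap": gap,
    }


# Epochs 98-100 from the augmented-model training log above
last_epochs = {
    "accuracy": [0.7860, 0.7760, 0.7750],
    "val_accuracy": [0.7960, 0.7260, 0.7890],
}
print(summarize_history(last_epochs, start_epoch=98))
```

In the notebook itself, `summarize_history(history_2.history)` would cover all 100 epochs. The gap is only a heuristic: a noisy validation curve like this one (for example the dip to 0.7260 at epoch 99) can swing a single epoch, which is why it is averaged over several.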

How can we improve the results further? One option is to tweak the number of layers and filters. Machine learning is very experimental, and the first model rarely works well; good results usually come from time and repeated experiments.

In this case, we can also try pretrained models. Pretrained models are open source models that other engineers (often researchers) have already built and trained, so we can reuse them instead of building a network from scratch.

Reusing a pretrained model on a new but similar task is called transfer learning. It will be covered in detail in the next notebook, but let's give it a shot right away.

3.6 Further Improvements: Using Pretrained Models¶

Pretrained models work well for many problems, without the need to build models from scratch.

Imagine how far you can get by standing on the shoulders of giants! Models trained on big datasets such as ImageNet have already learned general visual features, so reusing them can give impressive results.

Let's practice that. For more about pretrained models, check the next notebook.

You can find the pretrained models available in Keras on the Keras Applications page.
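Keras Applications models usually come with a matching `preprocess_input` function. For InceptionResNetV2 it is `keras.applications.inception_resnet_v2.preprocess_input`, which, as we understand it, scales pixel values from [0, 255] to [-1, 1], the range the ImageNet weights were trained with. The NumPy sketch below reproduces that scaling so it is easy to inspect; `inception_style_preprocess` is a hypothetical name, and in a real pipeline calling the Keras function itself is the safer choice. If images reach the model in a different range (for example [0, 1] after a `1/255` rescale), the pretrained features may be somewhat less effective.

```python
import numpy as np


def inception_style_preprocess(images):
    """Scale pixel values from [0, 255] to [-1, 1].

    Hypothetical stand-in for keras.applications.inception_resnet_v2.preprocess_input,
    assumed to compute x / 127.5 - 1.
    """
    x = np.asarray(images, dtype="float32")
    return x / 127.5 - 1.0


# One 1x1 RGB "image" in a batch of shape (1, 1, 1, 3)
batch = np.array([[[[0.0, 127.5, 255.0]]]])
print(inception_style_preprocess(batch).ravel())
```

The three pixel values map to -1, 0 and 1: black, mid-gray and white end up at the bottom, middle and top of the model's expected input range.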

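from tensorflow import keras

pretrained_base_model = keras.applications.InceptionResNetV2(
    weights='imagenet',        # Load weights pretrained on ImageNet
    include_top=False,         # Drop the ImageNet classifier on the top
    input_shape=(180, 180, 3)  # Match the size of our images
)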

Downloading data from https://storage.googleapis.com/tensorflow/keras-applications/inception_resnet_v2/inception_resnet_v2_weights_tf_dim_ordering_tf_kernels_notop.h5 219062272/219055592 [==============================] - 2s 0us/step 219070464/219055592 [==============================] - 2s 0us/step
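Passing `input_shape=(180, 180, 3)` instead of the model's default 299x299 changes every spatial size in the network. The Output Shape column of the summary below can be checked by hand with the usual formula for convolution and pooling layers: with `"valid"` padding, `out = (in - kernel) // stride + 1`; with `"same"` padding, `out = ceil(in / stride)`. The kernel, stride and padding values listed for the stem are our reading of the Keras InceptionResNetV2 source (an assumption), and `conv_output_size` is a hypothetical helper.

```python
def conv_output_size(size, kernel, stride=1, padding="valid"):
    """Output length along one spatial axis of a conv/pooling layer (Keras conventions)."""
    if padding == "same":
        return -(-size // stride)  # ceil(size / stride)
    # "valid": no padding, the kernel must fit entirely inside the input
    return (size - kernel) // stride + 1


# Assumed stem of InceptionResNetV2: (layer name in the summary, kernel, stride, padding)
stem = [
    ("conv2d_9", 3, 2, "valid"),
    ("conv2d_10", 3, 1, "valid"),
    ("conv2d_11", 3, 1, "same"),
    ("max_pooling2d_9", 3, 2, "valid"),
    ("conv2d_12", 1, 1, "valid"),
    ("conv2d_13", 3, 1, "valid"),
    ("max_pooling2d_10", 3, 2, "valid"),
]

size = 180
sizes = []
for name, kernel, stride, padding in stem:
    size = conv_output_size(size, kernel, stride, padding)
    sizes.append((name, size))
    print(f"{name:18s} -> {size}x{size}")
```

The printed sizes (89, 87, 87, 43, 43, 41, 20) match the summary, which is why the Inception-ResNet blocks that follow work on 20x20 feature maps.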

We then freeze the pretrained base model so that its ImageNet weights are not updated when we train on our own images; only the layers we add on top of it will be trained.

for layer in pretrained_base_model.layers:
    layer.trainable = False
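To see what freezing actually does, the sketch below uses a tiny hypothetical stand-in for the pretrained base (not InceptionResNetV2, to keep it fast), freezes it, adds a trainable `Dense` head, and trains for one epoch on random data. Afterwards the base reports no trainable weights and its weights are unchanged, while only the head's kernel and bias are trainable. It also shows that `base.trainable = False` is a one-line alternative to the loop above. According to the Keras transfer-learning guide, a frozen `BatchNormalization` layer also runs in inference mode, so its moving mean and variance are not updated either; this example relies on that TF 2.x / Keras behaviour.

```python
import numpy as np
from tensorflow import keras

# Hypothetical tiny stand-in for a pretrained base (NOT InceptionResNetV2)
base = keras.Sequential([
    keras.Input(shape=(8, 8, 3)),
    keras.layers.Conv2D(4, 3, activation="relu"),
    keras.layers.BatchNormalization(),
    keras.layers.GlobalAveragePooling2D(),
])

# Freeze layer by layer, as the notebook does...
for layer in base.layers:
    layer.trainable = False
# ...or, equivalently, freeze the whole model in one line
base.trainable = False

# New trainable classifier head on top of the frozen base
model = keras.Sequential([base, keras.layers.Dense(1, activation="sigmoid")])
model.compile(optimizer="adam", loss="binary_crossentropy")

weights_before = [w.copy() for w in base.get_weights()]

# One epoch on random data, only to show which weights change
rng = np.random.default_rng(0)
x = rng.random((16, 8, 8, 3)).astype("float32")
y = rng.integers(0, 2, size=(16, 1)).astype("float32")
model.fit(x, y, epochs=1, verbose=0)

weights_after = base.get_weights()
print("trainable weights in base:", len(base.trainable_weights))
print("trainable weights in model:", len(model.trainable_weights))
```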

Let's see the summary of the base model.
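The Param # column of the summary below can also be reproduced by hand. Most convolutions in this model appear to be built without a bias (`use_bias=False`) and followed by `BatchNormalization` without a learned scale (`scale=False`), which leaves 3 stored values per channel (beta, moving mean, moving variance); the `block35_*_conv` projections keep a bias and have no batch normalization. These settings are our assumption about the Keras implementation, but they match the rows checked below. `conv_params` and `bn_params` are hypothetical helpers.

```python
def conv_params(kernel, in_channels, out_channels, use_bias=False):
    """Weights in a Conv2D layer with a square kernel (plus one bias per filter if used)."""
    weights = kernel * kernel * in_channels * out_channels
    return weights + (out_channels if use_bias else 0)


def bn_params(channels, scale=False, center=True):
    """Values stored by BatchNormalization.

    Moving mean and moving variance are always stored per channel;
    gamma is added only if scale=True and beta only if center=True.
    """
    per_channel = 2 + int(scale) + int(center)
    return per_channel * channels


print(conv_params(3, 3, 32))                    # conv2d_9: 864
print(bn_params(32))                            # batch_normalization: 96
print(conv_params(3, 80, 192))                  # conv2d_13: 138240
print(conv_params(1, 128, 320, use_bias=True))  # block35_1_conv: 41280
```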

pretrained_base_model.summary()

Model: "inception_resnet_v2"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 180, 180, 3) 0

__________________________________________________________________________________________________

conv2d_9 (Conv2D) (None, 89, 89, 32) 864 input_1[0][0]

__________________________________________________________________________________________________

batch_normalization (BatchNorma (None, 89, 89, 32) 96 conv2d_9[0][0]

__________________________________________________________________________________________________

activation (Activation) (None, 89, 89, 32) 0 batch_normalization[0][0]

__________________________________________________________________________________________________

conv2d_10 (Conv2D) (None, 87, 87, 32) 9216 activation[0][0]

__________________________________________________________________________________________________

batch_normalization_1 (BatchNor (None, 87, 87, 32) 96 conv2d_10[0][0]

__________________________________________________________________________________________________

activation_1 (Activation) (None, 87, 87, 32) 0 batch_normalization_1[0][0]

__________________________________________________________________________________________________

conv2d_11 (Conv2D) (None, 87, 87, 64) 18432 activation_1[0][0]

__________________________________________________________________________________________________

batch_normalization_2 (BatchNor (None, 87, 87, 64) 192 conv2d_11[0][0]

__________________________________________________________________________________________________

activation_2 (Activation) (None, 87, 87, 64) 0 batch_normalization_2[0][0]

__________________________________________________________________________________________________

max_pooling2d_9 (MaxPooling2D) (None, 43, 43, 64) 0 activation_2[0][0]

__________________________________________________________________________________________________

conv2d_12 (Conv2D) (None, 43, 43, 80) 5120 max_pooling2d_9[0][0]

__________________________________________________________________________________________________

batch_normalization_3 (BatchNor (None, 43, 43, 80) 240 conv2d_12[0][0]

__________________________________________________________________________________________________

activation_3 (Activation) (None, 43, 43, 80) 0 batch_normalization_3[0][0]

__________________________________________________________________________________________________

conv2d_13 (Conv2D) (None, 41, 41, 192) 138240 activation_3[0][0]

__________________________________________________________________________________________________

batch_normalization_4 (BatchNor (None, 41, 41, 192) 576 conv2d_13[0][0]

__________________________________________________________________________________________________

activation_4 (Activation) (None, 41, 41, 192) 0 batch_normalization_4[0][0]

__________________________________________________________________________________________________

max_pooling2d_10 (MaxPooling2D) (None, 20, 20, 192) 0 activation_4[0][0]

__________________________________________________________________________________________________

conv2d_17 (Conv2D) (None, 20, 20, 64) 12288 max_pooling2d_10[0][0]

__________________________________________________________________________________________________

batch_normalization_8 (BatchNor (None, 20, 20, 64) 192 conv2d_17[0][0]

__________________________________________________________________________________________________

activation_8 (Activation) (None, 20, 20, 64) 0 batch_normalization_8[0][0]

__________________________________________________________________________________________________

conv2d_15 (Conv2D) (None, 20, 20, 48) 9216 max_pooling2d_10[0][0]

__________________________________________________________________________________________________

conv2d_18 (Conv2D) (None, 20, 20, 96) 55296 activation_8[0][0]

__________________________________________________________________________________________________

batch_normalization_6 (BatchNor (None, 20, 20, 48) 144 conv2d_15[0][0]

__________________________________________________________________________________________________

batch_normalization_9 (BatchNor (None, 20, 20, 96) 288 conv2d_18[0][0]

__________________________________________________________________________________________________

activation_6 (Activation) (None, 20, 20, 48) 0 batch_normalization_6[0][0]

__________________________________________________________________________________________________

activation_9 (Activation) (None, 20, 20, 96) 0 batch_normalization_9[0][0]

__________________________________________________________________________________________________

average_pooling2d (AveragePooli (None, 20, 20, 192) 0 max_pooling2d_10[0][0]

__________________________________________________________________________________________________

conv2d_14 (Conv2D) (None, 20, 20, 96) 18432 max_pooling2d_10[0][0]

__________________________________________________________________________________________________

conv2d_16 (Conv2D) (None, 20, 20, 64) 76800 activation_6[0][0]

__________________________________________________________________________________________________

conv2d_19 (Conv2D) (None, 20, 20, 96) 82944 activation_9[0][0]

__________________________________________________________________________________________________

conv2d_20 (Conv2D) (None, 20, 20, 64) 12288 average_pooling2d[0][0]

__________________________________________________________________________________________________

batch_normalization_5 (BatchNor (None, 20, 20, 96) 288 conv2d_14[0][0]

__________________________________________________________________________________________________

batch_normalization_7 (BatchNor (None, 20, 20, 64) 192 conv2d_16[0][0]

__________________________________________________________________________________________________

batch_normalization_10 (BatchNo (None, 20, 20, 96) 288 conv2d_19[0][0]

__________________________________________________________________________________________________

batch_normalization_11 (BatchNo (None, 20, 20, 64) 192 conv2d_20[0][0]

__________________________________________________________________________________________________

activation_5 (Activation) (None, 20, 20, 96) 0 batch_normalization_5[0][0]

__________________________________________________________________________________________________

activation_7 (Activation) (None, 20, 20, 64) 0 batch_normalization_7[0][0]

__________________________________________________________________________________________________

activation_10 (Activation) (None, 20, 20, 96) 0 batch_normalization_10[0][0]

__________________________________________________________________________________________________

activation_11 (Activation) (None, 20, 20, 64) 0 batch_normalization_11[0][0]

__________________________________________________________________________________________________

mixed_5b (Concatenate) (None, 20, 20, 320) 0 activation_5[0][0]

activation_7[0][0]

activation_10[0][0]

activation_11[0][0]

__________________________________________________________________________________________________

conv2d_24 (Conv2D) (None, 20, 20, 32) 10240 mixed_5b[0][0]

__________________________________________________________________________________________________

batch_normalization_15 (BatchNo (None, 20, 20, 32) 96 conv2d_24[0][0]

__________________________________________________________________________________________________

activation_15 (Activation) (None, 20, 20, 32) 0 batch_normalization_15[0][0]

__________________________________________________________________________________________________

conv2d_22 (Conv2D) (None, 20, 20, 32) 10240 mixed_5b[0][0]

__________________________________________________________________________________________________

conv2d_25 (Conv2D) (None, 20, 20, 48) 13824 activation_15[0][0]

__________________________________________________________________________________________________

batch_normalization_13 (BatchNo (None, 20, 20, 32) 96 conv2d_22[0][0]

__________________________________________________________________________________________________

batch_normalization_16 (BatchNo (None, 20, 20, 48) 144 conv2d_25[0][0]

__________________________________________________________________________________________________

activation_13 (Activation) (None, 20, 20, 32) 0 batch_normalization_13[0][0]

__________________________________________________________________________________________________

activation_16 (Activation) (None, 20, 20, 48) 0 batch_normalization_16[0][0]

__________________________________________________________________________________________________

conv2d_21 (Conv2D) (None, 20, 20, 32) 10240 mixed_5b[0][0]

__________________________________________________________________________________________________

conv2d_23 (Conv2D) (None, 20, 20, 32) 9216 activation_13[0][0]

__________________________________________________________________________________________________

conv2d_26 (Conv2D) (None, 20, 20, 64) 27648 activation_16[0][0]

__________________________________________________________________________________________________

batch_normalization_12 (BatchNo (None, 20, 20, 32) 96 conv2d_21[0][0]

__________________________________________________________________________________________________

batch_normalization_14 (BatchNo (None, 20, 20, 32) 96 conv2d_23[0][0]

__________________________________________________________________________________________________

batch_normalization_17 (BatchNo (None, 20, 20, 64) 192 conv2d_26[0][0]

__________________________________________________________________________________________________

activation_12 (Activation) (None, 20, 20, 32) 0 batch_normalization_12[0][0]

__________________________________________________________________________________________________

activation_14 (Activation) (None, 20, 20, 32) 0 batch_normalization_14[0][0]

__________________________________________________________________________________________________

activation_17 (Activation) (None, 20, 20, 64) 0 batch_normalization_17[0][0]

__________________________________________________________________________________________________

block35_1_mixed (Concatenate) (None, 20, 20, 128) 0 activation_12[0][0]

activation_14[0][0]

activation_17[0][0]

__________________________________________________________________________________________________

block35_1_conv (Conv2D) (None, 20, 20, 320) 41280 block35_1_mixed[0][0]

__________________________________________________________________________________________________

block35_1 (Lambda) (None, 20, 20, 320) 0 mixed_5b[0][0]

block35_1_conv[0][0]

__________________________________________________________________________________________________

block35_1_ac (Activation) (None, 20, 20, 320) 0 block35_1[0][0]

__________________________________________________________________________________________________

conv2d_30 (Conv2D) (None, 20, 20, 32) 10240 block35_1_ac[0][0]

__________________________________________________________________________________________________

batch_normalization_21 (BatchNo (None, 20, 20, 32) 96 conv2d_30[0][0]

__________________________________________________________________________________________________

activation_21 (Activation) (None, 20, 20, 32) 0 batch_normalization_21[0][0]

__________________________________________________________________________________________________

conv2d_28 (Conv2D) (None, 20, 20, 32) 10240 block35_1_ac[0][0]

__________________________________________________________________________________________________

conv2d_31 (Conv2D) (None, 20, 20, 48) 13824 activation_21[0][0]

__________________________________________________________________________________________________

batch_normalization_19 (BatchNo (None, 20, 20, 32) 96 conv2d_28[0][0]

__________________________________________________________________________________________________

batch_normalization_22 (BatchNo (None, 20, 20, 48) 144 conv2d_31[0][0]

__________________________________________________________________________________________________

activation_19 (Activation) (None, 20, 20, 32) 0 batch_normalization_19[0][0]

__________________________________________________________________________________________________

activation_22 (Activation) (None, 20, 20, 48) 0 batch_normalization_22[0][0]

__________________________________________________________________________________________________

conv2d_27 (Conv2D) (None, 20, 20, 32) 10240 block35_1_ac[0][0]

__________________________________________________________________________________________________

conv2d_29 (Conv2D) (None, 20, 20, 32) 9216 activation_19[0][0]

__________________________________________________________________________________________________

conv2d_32 (Conv2D) (None, 20, 20, 64) 27648 activation_22[0][0]

__________________________________________________________________________________________________

batch_normalization_18 (BatchNo (None, 20, 20, 32) 96 conv2d_27[0][0]

__________________________________________________________________________________________________

batch_normalization_20 (BatchNo (None, 20, 20, 32) 96 conv2d_29[0][0]

__________________________________________________________________________________________________

batch_normalization_23 (BatchNo (None, 20, 20, 64) 192 conv2d_32[0][0]

__________________________________________________________________________________________________

activation_18 (Activation) (None, 20, 20, 32) 0 batch_normalization_18[0][0]

__________________________________________________________________________________________________

activation_20 (Activation) (None, 20, 20, 32) 0 batch_normalization_20[0][0]

__________________________________________________________________________________________________

activation_23 (Activation) (None, 20, 20, 64) 0 batch_normalization_23[0][0]

__________________________________________________________________________________________________

block35_2_mixed (Concatenate) (None, 20, 20, 128) 0 activation_18[0][0]

activation_20[0][0]

activation_23[0][0]

__________________________________________________________________________________________________

block35_2_conv (Conv2D) (None, 20, 20, 320) 41280 block35_2_mixed[0][0]

__________________________________________________________________________________________________

block35_2 (Lambda) (None, 20, 20, 320) 0 block35_1_ac[0][0]

block35_2_conv[0][0]

__________________________________________________________________________________________________

block35_2_ac (Activation) (None, 20, 20, 320) 0 block35_2[0][0]

__________________________________________________________________________________________________

conv2d_36 (Conv2D) (None, 20, 20, 32) 10240 block35_2_ac[0][0]

__________________________________________________________________________________________________

batch_normalization_27 (BatchNo (None, 20, 20, 32) 96 conv2d_36[0][0]

__________________________________________________________________________________________________

activation_27 (Activation) (None, 20, 20, 32) 0 batch_normalization_27[0][0]

__________________________________________________________________________________________________

conv2d_34 (Conv2D) (None, 20, 20, 32) 10240 block35_2_ac[0][0]

__________________________________________________________________________________________________

conv2d_37 (Conv2D) (None, 20, 20, 48) 13824 activation_27[0][0]

__________________________________________________________________________________________________

batch_normalization_25 (BatchNo (None, 20, 20, 32) 96 conv2d_34[0][0]

__________________________________________________________________________________________________

batch_normalization_28 (BatchNo (None, 20, 20, 48) 144 conv2d_37[0][0]

__________________________________________________________________________________________________

activation_25 (Activation) (None, 20, 20, 32) 0 batch_normalization_25[0][0]

__________________________________________________________________________________________________

activation_28 (Activation) (None, 20, 20, 48) 0 batch_normalization_28[0][0]

__________________________________________________________________________________________________

conv2d_33 (Conv2D) (None, 20, 20, 32) 10240 block35_2_ac[0][0]

__________________________________________________________________________________________________

conv2d_35 (Conv2D) (None, 20, 20, 32) 9216 activation_25[0][0]

__________________________________________________________________________________________________

conv2d_38 (Conv2D) (None, 20, 20, 64) 27648 activation_28[0][0]

__________________________________________________________________________________________________

batch_normalization_24 (BatchNo (None, 20, 20, 32) 96 conv2d_33[0][0]

__________________________________________________________________________________________________

batch_normalization_26 (BatchNo (None, 20, 20, 32) 96 conv2d_35[0][0]

__________________________________________________________________________________________________

batch_normalization_29 (BatchNo (None, 20, 20, 64) 192 conv2d_38[0][0]

__________________________________________________________________________________________________

activation_24 (Activation) (None, 20, 20, 32) 0 batch_normalization_24[0][0]

__________________________________________________________________________________________________

activation_26 (Activation) (None, 20, 20, 32) 0 batch_normalization_26[0][0]

__________________________________________________________________________________________________

activation_29 (Activation) (None, 20, 20, 64) 0 batch_normalization_29[0][0]

__________________________________________________________________________________________________

block35_3_mixed (Concatenate) (None, 20, 20, 128) 0 activation_24[0][0]

activation_26[0][0]

activation_29[0][0]

__________________________________________________________________________________________________

block35_3_conv (Conv2D) (None, 20, 20, 320) 41280 block35_3_mixed[0][0]

__________________________________________________________________________________________________

block35_3 (Lambda) (None, 20, 20, 320) 0 block35_2_ac[0][0]

block35_3_conv[0][0]

__________________________________________________________________________________________________

block35_3_ac (Activation) (None, 20, 20, 320) 0 block35_3[0][0]

__________________________________________________________________________________________________

conv2d_42 (Conv2D) (None, 20, 20, 32) 10240 block35_3_ac[0][0]

__________________________________________________________________________________________________

batch_normalization_33 (BatchNo (None, 20, 20, 32) 96 conv2d_42[0][0]

__________________________________________________________________________________________________

activation_33 (Activation) (None, 20, 20, 32) 0 batch_normalization_33[0][0]

__________________________________________________________________________________________________

conv2d_40 (Conv2D) (None, 20, 20, 32) 10240 block35_3_ac[0][0]

__________________________________________________________________________________________________

conv2d_43 (Conv2D) (None, 20, 20, 48) 13824 activation_33[0][0]

__________________________________________________________________________________________________

batch_normalization_31 (BatchNo (None, 20, 20, 32) 96 conv2d_40[0][0]

__________________________________________________________________________________________________

batch_normalization_34 (BatchNo (None, 20, 20, 48) 144 conv2d_43[0][0]

__________________________________________________________________________________________________

activation_31 (Activation) (None, 20, 20, 32) 0 batch_normalization_31[0][0]

__________________________________________________________________________________________________

activation_34 (Activation) (None, 20, 20, 48) 0 batch_normalization_34[0][0]

__________________________________________________________________________________________________

conv2d_39 (Conv2D) (None, 20, 20, 32) 10240 block35_3_ac[0][0]

__________________________________________________________________________________________________

conv2d_41 (Conv2D) (None, 20, 20, 32) 9216 activation_31[0][0]

__________________________________________________________________________________________________

conv2d_44 (Conv2D) (None, 20, 20, 64) 27648 activation_34[0][0]

__________________________________________________________________________________________________

batch_normalization_30 (BatchNo (None, 20, 20, 32) 96 conv2d_39[0][0]

__________________________________________________________________________________________________

batch_normalization_32 (BatchNo (None, 20, 20, 32)   96          conv2d_41[0][0]
__________________________________________________________________________________________________
batch_normalization_35 (BatchNo (None, 20, 20, 64)   192         conv2d_44[0][0]
__________________________________________________________________________________________________
activation_30 (Activation)      (None, 20, 20, 32)   0           batch_normalization_30[0][0]
__________________________________________________________________________________________________
activation_32 (Activation)      (None, 20, 20, 32)   0           batch_normalization_32[0][0]
__________________________________________________________________________________________________
activation_35 (Activation)      (None, 20, 20, 64)   0           batch_normalization_35[0][0]
__________________________________________________________________________________________________
block35_4_mixed (Concatenate)   (None, 20, 20, 128)  0           activation_30[0][0]
                                                                 activation_32[0][0]
                                                                 activation_35[0][0]
__________________________________________________________________________________________________
block35_4_conv (Conv2D)         (None, 20, 20, 320)  41280       block35_4_mixed[0][0]
__________________________________________________________________________________________________
block35_4 (Lambda)              (None, 20, 20, 320)  0           block35_3_ac[0][0]
                                                                 block35_4_conv[0][0]
__________________________________________________________________________________________________
block35_4_ac (Activation)       (None, 20, 20, 320)  0           block35_4[0][0]
__________________________________________________________________________________________________
conv2d_48 (Conv2D)              (None, 20, 20, 32)   10240       block35_4_ac[0][0]
__________________________________________________________________________________________________
batch_normalization_39 (BatchNo (None, 20, 20, 32)   96          conv2d_48[0][0]
__________________________________________________________________________________________________
activation_39 (Activation)      (None, 20, 20, 32)   0           batch_normalization_39[0][0]
__________________________________________________________________________________________________
conv2d_46 (Conv2D)              (None, 20, 20, 32)   10240       block35_4_ac[0][0]
__________________________________________________________________________________________________
conv2d_49 (Conv2D)              (None, 20, 20, 48)   13824       activation_39[0][0]
__________________________________________________________________________________________________
batch_normalization_37 (BatchNo (None, 20, 20, 32)   96          conv2d_46[0][0]
__________________________________________________________________________________________________
batch_normalization_40 (BatchNo (None, 20, 20, 48)   144         conv2d_49[0][0]
__________________________________________________________________________________________________
activation_37 (Activation)      (None, 20, 20, 32)   0           batch_normalization_37[0][0]
__________________________________________________________________________________________________
activation_40 (Activation)      (None, 20, 20, 48)   0           batch_normalization_40[0][0]
__________________________________________________________________________________________________
conv2d_45 (Conv2D)              (None, 20, 20, 32)   10240       block35_4_ac[0][0]
__________________________________________________________________________________________________
conv2d_47 (Conv2D)              (None, 20, 20, 32)   9216        activation_37[0][0]
__________________________________________________________________________________________________
conv2d_50 (Conv2D)              (None, 20, 20, 64)   27648       activation_40[0][0]
__________________________________________________________________________________________________
batch_normalization_36 (BatchNo (None, 20, 20, 32)   96          conv2d_45[0][0]
__________________________________________________________________________________________________
batch_normalization_38 (BatchNo (None, 20, 20, 32)   96          conv2d_47[0][0]
__________________________________________________________________________________________________
batch_normalization_41 (BatchNo (None, 20, 20, 64)   192         conv2d_50[0][0]
__________________________________________________________________________________________________
activation_36 (Activation)      (None, 20, 20, 32)   0           batch_normalization_36[0][0]
__________________________________________________________________________________________________
activation_38 (Activation)      (None, 20, 20, 32)   0           batch_normalization_38[0][0]
__________________________________________________________________________________________________
activation_41 (Activation)      (None, 20, 20, 64)   0           batch_normalization_41[0][0]
__________________________________________________________________________________________________
block35_5_mixed (Concatenate)   (None, 20, 20, 128)  0           activation_36[0][0]
                                                                 activation_38[0][0]
                                                                 activation_41[0][0]
__________________________________________________________________________________________________
block35_5_conv (Conv2D)         (None, 20, 20, 320)  41280       block35_5_mixed[0][0]
__________________________________________________________________________________________________
block35_5 (Lambda)              (None, 20, 20, 320)  0           block35_4_ac[0][0]
                                                                 block35_5_conv[0][0]
__________________________________________________________________________________________________
block35_5_ac (Activation)       (None, 20, 20, 320)  0           block35_5[0][0]
__________________________________________________________________________________________________
conv2d_54 (Conv2D)              (None, 20, 20, 32)   10240       block35_5_ac[0][0]
__________________________________________________________________________________________________
batch_normalization_45 (BatchNo (None, 20, 20, 32)   96          conv2d_54[0][0]
__________________________________________________________________________________________________
activation_45 (Activation)      (None, 20, 20, 32)   0           batch_normalization_45[0][0]
__________________________________________________________________________________________________
conv2d_52 (Conv2D)              (None, 20, 20, 32)   10240       block35_5_ac[0][0]
__________________________________________________________________________________________________
conv2d_55 (Conv2D)              (None, 20, 20, 48)   13824       activation_45[0][0]
__________________________________________________________________________________________________
batch_normalization_43 (BatchNo (None, 20, 20, 32)   96          conv2d_52[0][0]
__________________________________________________________________________________________________
batch_normalization_46 (BatchNo (None, 20, 20, 48)   144         conv2d_55[0][0]
__________________________________________________________________________________________________
activation_43 (Activation)      (None, 20, 20, 32)   0           batch_normalization_43[0][0]
__________________________________________________________________________________________________
activation_46 (Activation)      (None, 20, 20, 48)   0           batch_normalization_46[0][0]
__________________________________________________________________________________________________
conv2d_51 (Conv2D)              (None, 20, 20, 32)   10240       block35_5_ac[0][0]
__________________________________________________________________________________________________
conv2d_53 (Conv2D)              (None, 20, 20, 32)   9216        activation_43[0][0]
__________________________________________________________________________________________________
conv2d_56 (Conv2D)              (None, 20, 20, 64)   27648       activation_46[0][0]
__________________________________________________________________________________________________
batch_normalization_42 (BatchNo (None, 20, 20, 32)   96          conv2d_51[0][0]
__________________________________________________________________________________________________
batch_normalization_44 (BatchNo (None, 20, 20, 32)   96          conv2d_53[0][0]
__________________________________________________________________________________________________
batch_normalization_47 (BatchNo (None, 20, 20, 64)   192         conv2d_56[0][0]
__________________________________________________________________________________________________
activation_42 (Activation)      (None, 20, 20, 32)   0           batch_normalization_42[0][0]
__________________________________________________________________________________________________
activation_44 (Activation)      (None, 20, 20, 32)   0           batch_normalization_44[0][0]
__________________________________________________________________________________________________
activation_47 (Activation)      (None, 20, 20, 64)   0           batch_normalization_47[0][0]
__________________________________________________________________________________________________
block35_6_mixed (Concatenate)   (None, 20, 20, 128)  0           activation_42[0][0]
                                                                 activation_44[0][0]
                                                                 activation_47[0][0]
__________________________________________________________________________________________________
block35_6_conv (Conv2D)         (None, 20, 20, 320)  41280       block35_6_mixed[0][0]
__________________________________________________________________________________________________
block35_6 (Lambda)              (None, 20, 20, 320)  0           block35_5_ac[0][0]
                                                                 block35_6_conv[0][0]
__________________________________________________________________________________________________
block35_6_ac (Activation)       (None, 20, 20, 320)  0           block35_6[0][0]
__________________________________________________________________________________________________
conv2d_60 (Conv2D)              (None, 20, 20, 32)   10240       block35_6_ac[0][0]
__________________________________________________________________________________________________
batch_normalization_51 (BatchNo (None, 20, 20, 32)   96          conv2d_60[0][0]
__________________________________________________________________________________________________
activation_51 (Activation)      (None, 20, 20, 32)   0           batch_normalization_51[0][0]
__________________________________________________________________________________________________
conv2d_58 (Conv2D)              (None, 20, 20, 32)   10240       block35_6_ac[0][0]
__________________________________________________________________________________________________
conv2d_61 (Conv2D)              (None, 20, 20, 48)   13824       activation_51[0][0]
__________________________________________________________________________________________________
batch_normalization_49 (BatchNo (None, 20, 20, 32)   96          conv2d_58[0][0]
__________________________________________________________________________________________________
batch_normalization_52 (BatchNo (None, 20, 20, 48)   144         conv2d_61[0][0]
__________________________________________________________________________________________________
activation_49 (Activation)      (None, 20, 20, 32)   0           batch_normalization_49[0][0]
__________________________________________________________________________________________________
activation_52 (Activation)      (None, 20, 20, 48)   0           batch_normalization_52[0][0]
__________________________________________________________________________________________________
conv2d_57 (Conv2D)              (None, 20, 20, 32)   10240       block35_6_ac[0][0]
__________________________________________________________________________________________________
conv2d_59 (Conv2D)              (None, 20, 20, 32)   9216        activation_49[0][0]
__________________________________________________________________________________________________
conv2d_62 (Conv2D)              (None, 20, 20, 64)   27648       activation_52[0][0]
__________________________________________________________________________________________________
batch_normalization_48 (BatchNo (None, 20, 20, 32)   96          conv2d_57[0][0]
__________________________________________________________________________________________________
batch_normalization_50 (BatchNo (None, 20, 20, 32)   96          conv2d_59[0][0]
__________________________________________________________________________________________________
batch_normalization_53 (BatchNo (None, 20, 20, 64)   192         conv2d_62[0][0]
__________________________________________________________________________________________________
activation_48 (Activation)      (None, 20, 20, 32)   0           batch_normalization_48[0][0]
__________________________________________________________________________________________________
activation_50 (Activation)      (None, 20, 20, 32)   0           batch_normalization_50[0][0]
__________________________________________________________________________________________________
activation_53 (Activation)      (None, 20, 20, 64)   0           batch_normalization_53[0][0]
__________________________________________________________________________________________________
block35_7_mixed (Concatenate)   (None, 20, 20, 128)  0           activation_48[0][0]
                                                                 activation_50[0][0]
                                                                 activation_53[0][0]
__________________________________________________________________________________________________
block35_7_conv (Conv2D)         (None, 20, 20, 320)  41280       block35_7_mixed[0][0]
__________________________________________________________________________________________________
block35_7 (Lambda)              (None, 20, 20, 320)  0           block35_6_ac[0][0]
                                                                 block35_7_conv[0][0]
__________________________________________________________________________________________________
block35_7_ac (Activation)       (None, 20, 20, 320)  0           block35_7[0][0]
__________________________________________________________________________________________________
conv2d_66 (Conv2D)              (None, 20, 20, 32)   10240       block35_7_ac[0][0]
__________________________________________________________________________________________________
batch_normalization_57 (BatchNo (None, 20, 20, 32)   96          conv2d_66[0][0]
__________________________________________________________________________________________________
activation_57 (Activation)      (None, 20, 20, 32)   0           batch_normalization_57[0][0]
__________________________________________________________________________________________________
conv2d_64 (Conv2D)              (None, 20, 20, 32)   10240       block35_7_ac[0][0]
__________________________________________________________________________________________________
conv2d_67 (Conv2D)              (None, 20, 20, 48)   13824       activation_57[0][0]
__________________________________________________________________________________________________